Designing a MOOC: how far did it reach? #csed

Posted: June 10, 2015 Filed under: Education, Opinion

Mark Guzdial has a post over on his blog, “Moving Beyond MOOCS: Could we move to understanding learning and teaching?”, that discusses the aspects of MOOC hype that still linger. (I’ve spoken about MOOCs done badly before, as well as recording the thoughts of people like Hugh Davis from Southampton.) One of Mark’s paragraphs reads:

“The value of being in the front row of a class is that you talk with the teacher. Getting physically closer to the lecturer doesn’t improve learning. Engagement improves learning. A MOOC puts everyone at the back of the class, listening only and doing the homework”

My reply to this was:

“You can probably guess that I have two responses here: the first is that the front row is not available to many in the real world in the first place, and the second is that, for far too many people, any seat in the classroom is better than none.

But I am involved in what is, for us, a large MOOC, so my responses have to be regarded in that light. Thanks for the post!”

Mark, of course, called my bluff and responded with:

“Nick, I know that you know the literature in this space, and care about design and assessment. Can you say something about how you designed your MOOC to reach those who would not otherwise get access to formal educational opportunities? And since your MOOC has started, do you know yet if you achieved that goal — are you reaching people who would not otherwise get access?”

So here is that response. Thanks for the nudge, Mark! The answer is a bit long, but please bear with me. We will be posting a longer summary after the course is completed, in a month or so; consider this the unedited taster. I’m putting this here early, prior to the detailed statistical work, so you can see where we are. All the numbers below are fresh off the system, to drive discussion and to answer Mark’s question at, pretty much, a conceptual level.

First up, as some background for everyone, the MOOC team I’m working with is the University of Adelaide’s Computer Science Education Research group, led by A/Prof Katrina Falkner, with me (Dr Nick Falkner), Dr Rebecca Vivian, and Dr Claudia Szabo.

I’ll start by noting that we’ve been working to solve the inherent scaling issues in the front of the classroom for some time. If I have a class of 12, there’s no problem in engaging with everyone, but I keep finding myself in rooms of 100+, which forces some people to sit away from me and also limits the number of meaningful interactions I can have with individuals in one setting. While I take Mark’s point about the front of the classroom, and the associated research is pretty solid on this, we encountered an inherent problem when we identified that students were better off down the front… and yet we kept teaching to rooms with more students than front. I’ll go out on a limb and say that this is actually a moral issue that we, as a sector, have had to look at and ignore in the face of constrained resources. The nature of large spaces and people, coupled with our inability to hover, means that we can either choose to have a single row of students effectively in a semi-circle facing us, or accept that, after a relatively small number of students or rows, we have constructed a space that is inherently divided by privilege and will lead to disengagement.

So, Katrina’s and my first foray into this space was dealing with the problem in the physical lecture spaces that we had, with the 100+ classes that we had.

Katrina and I published a paper on “contributing student pedagogy” in Computer Science Education 22 (4), 2012, to identify ways of forming valued small collaboration groups as a way to promote engagement and drive skill development. Ultimately, by reducing the class to a smaller number of clusters and making those clusters pedagogically useful, I can then bring a ‘front of the class’-like experience to every group I speak to. We have given talks and applied sessions on this, including a special session at SIGCSE, because we think it’s a useful technique that reduces the amount of ‘front privilege’ while extending the amount of ‘front benefit’. (Read the paper for actual detail; I am skimping on summary here.)

We then got involved in the support of the national Digital Technologies curriculum for primary and middle school teachers across Australia, after being invited by Google to produce a support MOOC (really a SPOC: a small, private, online course). The target learners were teachers who were about to teach, or were already teaching, into (initially) Foundation to Year 6; they had degrees but potentially no experience in this area. (I’ve written about this before and you can find more detail on this here, where I also thanked my previous teachers!)

The motivation of this group of learners was different from that of a traditional MOOC because (a) everyone had both a degree and probable employment in the sector, which reduced opportunistic registration to a large extent, and (b) Australian teachers are required to complete a certain number of professional development (PD) hours a year. Through a number of discussions across the key groups, we had our course recognised as PD, which meant that doing our course was officially considered valuable. That said, almost all of the teachers we spoke to were furiously keen for this information anyway, and my belief is that the PD was very much ‘icing’ rather than ‘cake’. (Thank you again to all of the teachers who have spent time taking our course; we really hope it’s been useful.)

To discuss access and reach, we can count the teachers who’ve taken the course (somewhere in the low thousands) and then estimate the number of students potentially assisted, which is when it gets a little crazy: that’s somewhere around 30,000 to 40,000.

In his talk at CSEDU 2014, Hugh Davis identified the student groups who get involved in MOOCs as follows. The majority of people undertaking MOOCs were life-long learners (older, degreed, M/F 50/50), people seeking skills via PD, and those with poor access to Higher Ed. There is also a group of Uni ‘tasters’, but it is very, very small. (I think we can agree that tasting a MOOC is not tasting a campus-based Uni experience. Less ivy, for starters.) The three approaches to the course once inside were auditing, completing and sampling, and it’s this final one that I want to emphasise, because it brings us to one of the distinctive features of MOOCs: we are not in control of when people decide that they are satisfied with the free education they are accessing, unlike our strong gatekeeping on traditional courses.

I am in total agreement that a MOOC is not the same as a classroom but, also, that it is not the same as a traditional course, where we define how the student will achieve their goals and how they will know when they have completed. MOOCs function far more like many people’s experience of web browsing: they hunt for what they want and stop when they have it, thus the sampling engagement pattern above.

(As an aside, does this mean that a course that is perceived as ‘all back of class’ will rapidly be abandoned because it is distasteful? This makes the student-consumer a much more powerful player in their own educational market and is potentially worth remembering.)

Knowing these different approaches, we designed the individual subjects and the overall program so that it was very much up to the participant how much they chose to take, and individual modules were designed to be relatively self-contained while fitting into a well-designed overall flow that built in complexity and towards more abstract concepts. Thus, we supported auditing, completing and sampling, whereas our usual face-to-face (f2f) courses only support the first two in a way that we can measure.

As Hugh notes, and as we agree from growing experience, marking and progress measures at scale are very difficult, especially when automated marking is not enough or not feasible. Based on our earlier work on contributing collaboration in the classroom, for the F-6 Teacher MOOC we used a strong peer-assessment model where contributions and discussions were heavily linked. Because of the nature of the cohort, geographical and year-level groups formed who then conducted additional sessions and produced shared material at a slightly terrifying rate. We took the approach that we were not telling teachers how to teach; we were helping them to develop and share materials that would assist in their teaching. This reduced potential divisions and allowed us to establish a mutually respectful relationship that facilitated openness.

(It’s worth noting that the courseware is Creative Commons, open and free. There are people reassembling the course for their specific take on the school system as we speak. We have a national curriculum but a state-focused approach to education, with public and many independent systems. Nobody makes any money out of providing this course to teachers and the material will always be free. Thank you again to Google for their ongoing support and funding!)

Overall, in this first F-6 MOOC, we had higher than usual retention of students and higher than usual participation, for the reasons I’ve outlined above. But this material was curriculum support for teachers of young students, all of whom were pre-programming, and it could be contained in videos and online sharing of materials and discussion. We were also in the MOOC sweet spot: existing degreed learners and a PD driver, where the PD requirement depended on progressive demonstration of goal achievement, which we recognised post-course with a pre-approved certificate form. (Important note: if you are doing this, clear up as early as you can how the PD requirements are met and how they need to be reported back. It meant that we could give people something valuable in a short time.)

The programming MOOC, Think. Create. Code on EdX, was more challenging in many regards. We knew we were in a more difficult space and would be more in what I shall refer to as ‘the land of the average MOOC consumer’. No strong focus, no PD driver, no geographically guaranteed communities. We had to think carefully about what we considered to be useful interaction with the course material. What counted as success?

To start with, we took an image-based approach (I don’t think I need to provide supporting arguments for media-driven computing!) where students would produce images and, over time, refine their coding skills to produce and understand how to produce more complex images, building towards animation. People who have not had good access to education may not understand why we would use programming in more complex systems but our goal was to make images and that is a fairly universally understood idea, with a short production timeline and very clear indication of achievement: “Does it look like a face yet?”

In terms of useful interaction, if someone wrote a single program that drew a face, for the first time – then that’s valuable. If someone looked at someone else’s code and spotted a bug (however we wish to frame this), then that’s valuable. I think that someone writing a single line of correct code, where they understand everything that they write, is something that we can all consider to be valuable. Will it get you a degree? No. Will it be useful to you in later life? Well… maybe? (I would say ‘yes’ but that is a fervent hope rather than a fact.)

So our design brief was that it should be very easy to get into programming immediately, with an active and engaged approach, and that we have the same “mostly self-contained week” approach, with lots of good peer interaction and mutual evaluation to identify areas that needed work to allow us to build our knowledge together. (You know I may as well have ‘social constructivist’ tattooed on my head so this is strongly in keeping with my principles.) We wrote all of the materials from scratch, based on a 6-week program that we debated for some time. Materials consisted of short videos, additional material as short notes, participatory activities, quizzes and (we planned for) peer assessment (more on that later). You didn’t have to have been exposed to “the lecture” or even the advanced classroom to take the course. Any exposure to short videos or a web browser would be enough familiarity to go on with.

Our goal was to encourage as much engagement as possible, taking into account the fact that any number of students over 1,000 would be very hard to support individually, even with the 5-6 staff we had to help out. But we wanted students to be able to develop quickly, share quickly and, ultimately, comment back on each other’s work quickly. From a cognitive load perspective, it was crucial to keep the number of things that weren’t relevant to the task to a minimum, as we couldn’t assume any prior familiarity. This meant no installers, no linking, no loaders, no shenanigans. Write program, press play, get picture, share to gallery, winning.

As part of this, our support team (thanks, Jill!) developed a browser-based environment for Processing.js that integrated with a course gallery. Students could save their own work easily and share it trivially. Our early indications show that a lot of students jumped in and tried to do something straight away. (Processing is really good for getting something up, fast, as we know.) We spent a lot of time testing browsers, testing software, and writing code. All of the recorded materials used that development environment (this was important as Processing.js and Processing have some differences) and all of our videos show the environment in action. Again, as little extra cognitive load as possible – no implicit requirement for abstraction or skills transfer. (The AdelaideX team worked so hard to get us over the line – I think we may have eaten some of their brains to save those of our students. Thank you again to the University for selecting us and to Katy and the amazing team.)

The actual student group, about 20,000 people across 176 countries, did not have the “built-in” motivation of the previous group, although they would all have their own levels of motivation. We used ‘meet and greet’ activities to drive some group formation (which worked to a degree) and we also had a very high level of staff monitoring of key question areas (which participants noted as very high compared with other EdX courses they’d taken), with everyone putting in 30-60 minutes a day on rotation. But, as noted before, the biggest trick to getting everyone engaged at large scale is to get everyone into groups where they have someone to talk to. This was supposed to be provided by a peer evaluation system that was initially part of the assessment package.

Sadly, the peer assessment system didn’t work as we wanted it to and we were worried that it would become a disincentive rather than a supporting community, so we switched to a forum-based discussion of the works on the EdX discussion forum. At this point, the lack of integration between our own UoA programming system and gallery and the EdX discussion system created too much distance; the close binding we had in the F-6 MOOC wasn’t there. We’re still working on this because everything we know, and all the evidence we’ve collected before, tells us that this is a vital part of the puzzle.

In terms of visible output, the amount of novel and amazing artwork that has been generated has blown us all away. The variety is huge: armed with approximately 5 statements, the number of different pieces you can produce is surprisingly large. Add in control statements and repetition? BOOM. Every student can write something that speaks to her or him and show it to other people, encouraging creativity and facilitating engagement.

From the stats side, I don’t have access to the raw stats, so it’s hard for me to give you a statistically sound answer as to who we have or have not reached. This is one of the drawbacks of working with a pre-existing platform and, yes, it bugs me a little, because I can’t plot this against that unless someone has built it into the platform. But I think I can tell you some things.

I can tell you that roughly 2,000 students attempted quiz problems in the first week of the course and that over 4,000 watched a video in the first week. No real surprises there: registrations are an indicator of interest, not a commitment. During that time, 7,000 students were active in the course in some way, including just writing code, discussing it and having fun in the gallery environment. (As it happens, we appear to be plateauing at about 3,000 active students, but time will tell. We have a lot of post-course analysis to do.)

It’s a mistake to focus on the “drop” rates because the MOOC model is different. We have no idea if the people who left got what they wanted or not, or why they didn’t do anything. We may never know but we’ll dig into that later.

I can also tell you that only 57% of the students currently enrolled have explicitly declared themselves to be male, and that is the most likely indicator that we are reaching students who might not usually be in a programming course: the remaining 43%, of whom 33% have self-identified as women, is far higher than we ever see in classes locally. If you want evidence of reach then it begins here, as part of the provision of an environment that is, apparently, more welcoming to ‘non-men’.

We have had a number of student comments that reflect positive reach and, while these are not statistically significant, I think that this also gives you support for the idea of additional reach. Students have been asking how they can save their code beyond the course and this is a good indicator: ownership and a desire to preserve something valuable.

For student comments, however, this is my favourite.

I’m no artist. I’m no computer programmer. But with this class, I see I can be both. #processingjs (Link to student’s work) #code101x

That’s someone whom this course put in the right place in the classroom. After all of this is done, we’ll go looking to see how many more we can find.

I know this is long but I hope it answered your questions. We’re looking forward to doing a detailed write-up of everything after the course closes and we can look at everything.

EduTech AU 2015, Day 2, Higher Ed Leaders, “Change and innovation in the Digital Age: the future is social, mobile and personalised.” #edutechau @timbuckteeth

Posted: June 3, 2015 Filed under: Education

And heeere’s Steve Wheeler (@timbuckteeth)! Steve is an A/Prof of Learning Technologies at Plymouth in the UK. He and I have been at the same event before (CSEDU, Barcelona) and we seem to agree on a lot. Today’s cognitive bias warning is that I will probably agree with Steve a lot, again. I’ve already quizzed him about his talk and, as I understand it, what he wants to talk about is how our students can have altered expectations without necessarily becoming some sort of different species. (There are no Digital Natives. No, Prensky was wrong. Check out Helsper, 2010, from the LSE.) So, on to the talk and enough of my nonsense!

Steve claims he’s going to recap the previous speaker, but in an English accent. Ah, the Mayflower steps on the quayside in Plymouth, except that they’re not, because the real Mayflower steps are in a ladies’ loo in a pub, 100m back from the quay. The moral? What you expect to be getting is not always what you get. (Tourists think they have the real thing, locals know the truth.)

“Any sufficiently advanced technology is indistinguishable from magic” – Arthur C. Clarke.

Educational institutions are riddled with bad technology purchases where we buy something, don’t understand it, don’t support it and yet we’re stuck with it or, worse, try to teach with it when it doesn’t work.

Predicting the future is hard but, for educators, we can do it better if we look at:

- Pedagogy first

- Technology next (that fits the pedagogy)

Steve then plugs his own book with a quote on technology not being a silver bullet.

But who will be our students? What are their expectations for the future? Common answers include collaboration (student and staff) and more making and doing. They don’t like being talked at. Students today do not have a clear memory of the previous century; their expectations are based on the world that they are living in now, not the world that we grew up in.

Meet Student 2.0!

The average digital birth of children happens at about six months – but they can be on the Internet before they are born, via ultrasound photos. (Anyone who has tried to swipe or pinch-zoom a magazine knows why kids take to it so easily.) Students of today have tools and technology and this is what allows them to create, mash up, and reinvent materials.

What about Game Based Learning? What do children learn from playing games?

Three biggest fears of teachers using technology

- How do I make this work?

- How do I avoid looking like an idiot?

- They will know more about it than I do.

Three biggest fears of students

- Bad wifi

- Spinning wheel of death

- Low battery

The laptops and devices you see in lectures are personal windows on the world, ongoing conversations and learning activities – it’s not purely inattention or anti-learning. Student questions on Twitter can be answered by people all around the world and that’s extending the learning dialogue out a long way beyond the classroom.

Voltaire said that we were products of our age. Walrick asks: how can we prepare students for a future? Steve showed us a picture of himself as a young boy who had been turned off asking questions by a mocking teacher. But the last two years of his schooling were in Holland, where he went to the Philips ‘flying saucer’, a technology museum. There, he saw an early video conferencing system, and that inspired him with a vision of the future.

Steve wanted to be an astronaut but his career advisor suggested he aim lower, because he wasn’t an American. The point is not that Steve wanted to be an astronaut but that he wanted to be an explorer, the role that he occupies now in education.

Steve shared a quote that education is “about teaching students not subjects” and he shared the awesome picture of ‘named quadrilaterals’. My favourite is ‘Bob’. We have a very definite idea of what we want students to write as an answer, but we suppress creative answers and we don’t necessarily drive the approach to learning that we want.

Ignorance spreads happily by itself, we shouldn’t be helping it. Our visions of the future are too often our memories of what our time was, transferred into modern systems. Our solution spaces are restricted by our fixations on a specific way of thinking. This prevents us from breaking out of our current mindset and doing something useful.

What will the future be? It was multimedia, it was the web, but where is it going? Mobile devices became the most likely web browser platform in 2013 and their share is growing.

What will our new technologies be? Things get smaller, faster and lighter as they mature. We have to think about solving problems in new ways.

Here’s a fire hose sip of technologies: artificial intelligence is on the way up, touch surfaces are getting better, wearables are getting smarter, we’re looking at remote presence, immersive environments, 3D printers are changing manufacturing and teaching, gestural computing, mind control of devices, actual physical implants into the body…

From Nova Spivack, we can plot information connectivity against social connectivity, and what we want is growth on both axes: a giant arrow pointing up to the top right. We don’t yet have a Web form that connects information, knowledge and people, i.e. linking intelligence and people. We’re already seeing some of this with recommenders, intelligent filtering, and sentiment tracking. (I’m still waiting for the Semantic Web to deliver; I started doing work on it in my PhD, mumble years ago.)

A possible topology is: infrastructure is distributed and virtualised, our interfaces are 3D and interactive, built onto mobile technology and using ‘intelligent’ systems underneath.

But you cannot assume that your students are all at the same level or have all of the same devices: the digital divide is as real and as damaging as any social divide. Steve alluded to Personal Learning Networks, which you can read about in my previous blog post on him.

How will teaching change? It has to move away from cutting down students into cloned templates. We want students to be self-directed, self-starting, equipped to capture information, collaborative, and oriented towards producing their own things.

Let’s get back to our roots:

- We learn by doing (Piaget, 1950)

- We learn by making (Papert, 1960)

Just because technology is making some of this doing and making easier doesn’t mean we’re making it worthless; it means that we have time to do other things. Flip the roles, not just the classroom. Let students be the teachers: we do learn by teaching. (Couldn’t agree more.)

Back to Papert: “The best learning takes place when students take control.” Students can reflect through blogging as they present their information to a hidden audience that they are actually writing for. These physical and virtual networks grow, building their personal learning networks as they connect to more people who are connected to more people. (Steve’s a huge fan of Twitter. I’m not quite as connected as he is, but that’s like saying this puddle is smaller than the North Sea.)

Some of our students are strongly connected and they do store their knowledge in groups and friendships, which really reflects how they find things out. This rolls into digital cultural capital and who our groups are.

(Then there was a stream of images at too high a speed for me to capture. Go and download the slides; they’re Creative Commons and a lot of fun.)

Learners will need new competencies and literacies.

Always nice to hear Steve speak and, of course, I still agree with a lot of what he said. I won’t prod him for questions, though.

EduTech AU 2015, Day 2, Higher Ed Leaders, “Assessment: The Silent Killer of Learning”, #edutechau @eric_mazur

Posted: June 3, 2015 Filed under: Education

No surprise that I’m very excited about this talk as well. Eric is a world-renowned educator and physicist, having developed Peer Instruction in 1990 for his classes at Harvard as a way to deal with students not developing a working physicist’s approach to the content of his course. I should note that Eric also gave this talk yesterday and the inimitable Steve Wheeler blogged that one, so you should read Steve as well. But after me. (Sorry, Steve.)

I’m not an enormous fan of most of the assessment we use as most grades are meaningless, assessment becomes part of a carrot-and-stick approach and it’s all based on artificial timelines that stifle creativity. (But apart from that, it’s fine. Ho ho.) My pithy statement on this is that if you build an adversarial educational system, you’ll get adversaries, but if you bother to build a learning environment, you’ll get learning. One of the natural outcomes of an adversarial system is activities like cheating and gaming the system, because people start to treat beating the system as the goal itself, which is highly undesirable. You can read a lot more about my views on plagiarism here, if you like. (Warning: that post links to several others and is a bit of a wormhole.)

Now, let’s hear what Eric has to say on this! (My comments from this point on will attempt to contain themselves in parentheses. You can find the slides for his talk, all 62MB of them, from this link on his website.) It’s important to remember that one of the reasons that Eric’s work is so interesting is that he is looking for evidence-based approaches to education.

Eric discussed the use of flashcards. A week after flashcard study, students retain 35%. After two weeks, it’s almost gone. He tried to communicate this to someone who was launching a cloud-based flashcard app. Her response was “we only guarantee they’ll pass the test”.

*low, despairing chuckle from the audience*

Of course most students study to pass the test, not to learn, and they are not the same thing. For years, Eric has been bashing the lecture (yes, he noted the irony) but now he wants to focus on changing assessment and getting it away from rote learning and regurgitation. The assessment practices we use now are not 21st century focused, they are used for ranking and classifying but, even then, doing it badly.

So why are we assessing? What are the problems that are rampant in our assessment procedure? What are the improvements we can make?

How many different purposes of assessment can you think of? Eric gave us 90 seconds to come up with a list. Katrina and I came up with about 10, most of which were serious, but it was an interesting question to reflect upon. (Eric snuck

- Rate and rank students

- Rate professor and course

- Motivate students to keep up with work

- Provide feedback on learning to students

- Provide feedback to instructor

- Provide instructional accountability

- Improve the teaching and learning.

Ah, but look at the verbs – they are multi-purpose and in conflict. How can one thing do so much?

So what are the problems? Many tests are fundamentally inauthentic: regurgitation in useless and inappropriate ways. Many problem-solving approaches are inauthentic as well (a big problem for computing, where we keep writing “Hello, World”). What does a real problem look like? It’s an interruption in our pathway to our desired outcome; it’s not the outcome that’s important, it’s the pathway and the solution to reach it that are important. Typical student problem? Open the book to chapter X to apply known procedure Y to determine an unknown answer.

Shout out to Bloom’s! Here’s Eric’s slide to remind you.

Eric doesn’t think that many of us, including Harvard, even reach the Applying stage. He referred to a colleague in physics who used baseball problems throughout the course in assignments, until he reached the final exam, where he ran out of baseball problems and used football problems. “Professor! We’ve never done football problems!” Eric noted that, while the audience were laughing, we should really be crying. If we can’t apply what we’ve learned then we haven’t actually learned it.

Eric sneakily put more audience participation into the talk with an open ended question that appeared to not have enough information to come up with a solution, as it required assumptions and modelling. From a Bloom’s perspective, this is right up the top.

Students loathe assumptions? Why? Mostly because we’ll give them bad marks if they get it wrong. But isn’t the ability to make assumptions a really important skill? Isn’t this fundamental to success?

Eric demonstrated how to tame the problem by adding in more constraints but this came at the cost of the creating stage of Bloom’s and then the evaluating and analysing. (Check out his slides, pages 31 to 40, for details of this.) If you add in the memorisation of the equation, we have taken all of the guts out of the problem, dropping down to the lowest level of Bloom’s.

But, of course, computers can do most of the work that is mechanistic. Problems at the bottom layer of Bloom’s are going to be solved by machines – this is not something we should be training 21st Century students for.

But… real problem solving is erratic. Riddled with fuzziness. Failure prone. Not guaranteed to succeed. Most definitely not guaranteed to be optimal. The road to success is littered with failures.

But, if you make mistakes, you lose marks. But if you’re not making mistakes, you’re very unlikely to be creative and innovative and this is the problem with our assessment practices.

Eric showed us a picture of a traditional exam room: stressful, isolated, deprived of calculators and devices. Eric’s joke was that we are going to have to take exams naked to ensure we’re not wearing smart devices. We are in a time and place where we can look up whatever we want, whenever we want. But it’s how you use that information that makes a difference. Why are we testing and assessing students under such a set of conditions? Why do we imagine that the result we get here is going to be any indicator at all of the likely future success of the student with that knowledge?

Cramming for exams? Great, we store the information in short-term memory. A few days later, it’s all gone.

Assessment produces a conflict, which Eric noticed when he started teaching a team- and project-based course. He was coaching for most of the course, switching to a judging role for the monthly fair. He found it difficult to judge them because he had a coach/judge conflict. Why do we combine it in education when it would be unfair or unpleasant in every other area of human endeavour? We hide behind the veil of objectivity and fairness. It’s not a matter of feelings.

But… we go back to Bloom’s. The only thinking skill that can be evaluated truly objectively is remembering, at the bottom again.

But let’s talk about grade inflation and cheating. Why do people cheat at education when they don’t generally cheat at learning? But educational systems often conspire to rob us of our ownership and love of learning. Our systems set up situations where students cheat in order to succeed.

- Mimic real life in assessment practices!

Open-book exams. Information sticks when you need it and use it a lot. So use it. Produce problems that need it. Eric’s thought is you can bring anything you want except for another living person. But what about assessment on laptops? Oh no, Google access! But is that actually a problem? Any question to which the answer can be Googled is not an authentic question to determine learning!

Eric showed a video of excited students doing a statistics test as a team-based learning activity. After an initial pass at the test, the individual responses are collected (for up to 50% of the grade), and then students work as a group to check their answers against an IF-AT (Immediate Feedback Assessment Technique) scratch card for the rest of the marks. Discussion, conversation, and the students do their own grading for you. They’ve also had the “A-ha!” moment. Assessment becomes a learning opportunity.

Eric’s not a fan of multiple choice so his Learning Catalytics software allows similar comparison of group answers without having to use multiple choice. Again, the team-based activities are social, interactive and much less stressful.

- Focus on feedback, not ranking.

Objective ranking is a myth. The amount of, and success with, advanced education is no indicator of overall success in many regards. So why do we rank? Eric showed some graphs of his students (in earlier courses) plotting final grades in physics against the conceptual understanding of force. Some people still got top grades without understanding force as it was redefined by Newton. (For those who don’t know, Aristotle was wrong on this one.) Worse still is the student who mastered the concept of force and got a C, when a student who didn’t master force got an A. Objectivity? Injustice?

- Focus on skills, not content

Eric referred to Wiggins and McTighe, “Understanding by Design.” The traditional approach is that course content drives assessment design. Wiggins and McTighe advocate identifying what the outcomes are and formulating these as action verbs, ‘doing’ x rather than ‘understanding’ x. You use these to identify what you think the acceptable evidence is for these outcomes and then you develop the instructional approach. This is totally outcomes-based.

- Resolve coach/judge conflict

In his project-based course, Eric brought in external evaluators, leaving his coach role unsullied. This also validates Eric’s approach in the eyes of his colleagues. Peer- and self-evaluation are also crucial here. Reflective time to work out how you are going is easier if you can see other people’s work (even anonymously). Calibrated peer review, cpr.molsci.ucla.edu, is another approach but Eric ran out of time on this one.

If we don’t rethink assessment, the result of our assessment procedures will never actually provide vital information to the learner or us as to who might or might not be successful.

I really enjoyed this talk. I agree with just about all of this. It’s always good when an ‘internationally respected educator’ says it as then I can quote him and get traction in change-driving arguments back home. Thanks for a great talk!

EduTech AU 2015, Day 2, Higher Ed Leaders, “Innovation + Technology = great change to higher education”, #edutechau

Posted: June 3, 2015 Filed under: Education | Tags: education, higher education, learning, teaching, measurement, teaching approaches, educational problem, collaboration, resources, tools, design, principles of design, advocacy, mit, educational research, grand challenge, community, ethics, thinking, students, edutechau, edutech2015, olpc, one laptop per child, nicholas negroponte, mit media lab, seymour papert, Nicholas, connectivity, market forces, public education

Big session today. We’re starting with Nicholas Negroponte, founder of the MIT Media Lab and the founder of One Laptop Per Child (OLPC), an initiative to create/provide affordable educational devices for children in the developing world. (Nicholas is coming to us via video conference, hooray, 21st Century, so this may or may not work well in translation to blogging. Please bear with me if it’s a little disjointed.)

Nicholas would rather be here but he’s bravely working through his first presentation of this type! It’s going to be a presentation with some radical ideas so he’s hoping for conversation and debate. The presentation is broken into five parts:

- Learning learning. (Teaching and learning as separate entities.)

- What normal market forces will not do. (No real surprise that standard market forces won’t work well here.)

- Education without curricula. (Learning comes from many places and situations. Understanding and establishing credibility.)

- Where do new ideas come from? (How do we get them, how do we not get in the way.)

- Connectivity as a human right. (Is connectivity a human right or a means to rights such as education and healthcare? Human rights are free so that raises a lot of issues.)

Nicholas then drilled down into “Learning learning”, starting with a reference to Seymour Papert, and reflected, from a personal perspective, on the sadness of Seymour’s serious accident and its effect on his health. Nicholas referred to Papert’s and Minsky’s work on trying to understand how children and machines learned, respectively. In 1968, Seymour started thinking about it and on April 9, 1970, he gave a talk on his thoughts. Seymour realised that thinking about programs gave insight into thinking, relating to the deconstruction and stepwise solution building (algorithmic thinking) that novice programmers, such as children, had to go through.

These points were up on the screen as Nicholas spoke:

- Construction versus instruction

- Why reinventing the wheel is good

- Coding as thinking about thinking

How do we write code? Write it, see if it works, see which behaviours aren’t working, change the code (in an informed way, with any luck) and try again. (It’s a little more complicated than that but that’s the core.) We’re now into the area of transferable skills – it appeared that children writing computer programs learned a skill that transferred over into their ability to spell, potentially from the methodical application of debugging techniques.

Nicholas talked about a spelling bee system where you would focus on the 8 out of 10 you got right and ignore the 2 you didn’t. The ‘debugging’ kids would talk about the ones that they didn’t get right because they were analysing their mistakes, both as a peer group and through individual reflection.

Nicholas then moved on to the failure of market forces. Why does Finland do so well when it has no tests, no homework, and the fewest school hours per day and school days per year? One reason? No competition between children. No movement of core resources into the private sector (education as poorly functioning profit machine). Nicholas identified the core difference between the mission and the market, which beautifully summarises my thinking.

The OLPC program started in Cambodia for a variety of reasons, including someone associated with the lab being a friend of the King. OLPC laptops could go into areas where the government wasn’t providing schools for safety reasons, as it needed minesweepers and the like. Nicholas’ son came to Cambodia from Italy to connect up the school to the Internet. What would the normal market not do? Telecoms would come and get cheaper. Power would come and get cheaper. Laptops? Hmm. The software companies were pushing the hardware companies, so they were both caught in a spiral of increasing power consumption for utility. Where was the point where we could build a simple laptop, as a mission of learning, that could have a smaller energy footprint and bring laptops and connectivity to billions of people?

This is one of the reasons why OLPC is a non-profit – you don’t have to sell laptops to support the system, you’re supporting a mission. You didn’t need to sell or push to justify staying in a market, as the production volume was already at a good price. Why did this work well? You can make partnerships that weren’t possible otherwise. It derails the “ah, you need food and shelter first” argument because you can change the “why do we need a laptop” argument to “why do we need education?” at which point education leads to improved societal conditions. Why laptops? Tablets are more consumer-focused than construction-focused. (Certainly true of how I use my tech.)

(When we launched the first of the Digital Technologies MOOCs, the deal we agreed upon with Google was that it wasn’t a profit-making venture at all. It never will be. Neither we nor Google make money from the support of teachers across Australia so we can have all of the same advantages as Nicholas mentioned above: open partnerships, no profit motive, working for the common good as a mission of learning and collegial respect. Highly recommended approach, if someone is paying you enough to make your rent and eat. The secret truth of academia is that they give you money to keep you housed, clothed and fed while you think.)

Nicholas told a story of kids changing from being scared or bored of school to using an approach that brings kids flocking in. A great measure of success.

Now, onto Education without curricula, starting by talking public versus private. This is a sensitive subject for many people. The biggest problem for public education in many cases is the private educational system, drawing caring educators out into a closed system. Remember Finland? There are essentially no private schools and their educational system is astoundingly good. Nicholas’ points were:

- Public versus private

- Age segregation

- Stop testing. (Yay!)

The public sector is losing the imperative of the civic responsibility for education. Nicholas thinks it doesn’t make sense that we still segregate by ages as a hard limit. He thinks we should get away from breaking it into age groups, as it doesn’t clearly reflect where students are at.

Oh, testing. Nicholas correctly labelled the parental complicity in the production of the testing pressure cooker. “You have to get good grades if you’re going to Princeton!” The testing mania is dominating institutions and we do a lot of testing to measure and rank children, rather than determining competency. Oh, so much here. Testing leads to destructive behaviour.

So where do new ideas come from? (A more positive note.) Nicholas is interested in Higher Ed as sources of new ideas. Why does HE exist, especially if we can do things remotely or off campus? What is the role of the Uni in the future? Ha! Apparently, when Nicholas started the MIT media lab, he was accused of starting a sissy lab with artists and soft science… oh dear, that’s about as wrong as someone can get. His use of creatives was seen as soft when, of course, using creative users addressed two issues to drive new ideas: a creative approach to thinking and consulting with the people who used the technology. Who really invented photography? Photographers. Three points from this section.

- Children: our most precious natural resource

- Incrementalism is the enemy of creativity

- Brain drain

On the brain drain, we lose many, many students to other places. Unis are places to solve huge problems rather than small, profit-oriented problems. The entrepreneurial focus leads to solving small problems, which is sucking a lot of big thinking out of the system. The app model is leading to a human resource deficit because the start-up phenomenon is ripping away some of our best problem solvers.

Finally, to connectivity as a human right. This is something that Nicholas is very, very passionate about. Not content. Not laptops. Being connected. Learning, education, and access to these, from early in life to the end of life – connectivity is the end of isolation. Isolation comes in many forms and can be physical, geographical and social. Here are Nicholas’ points:

- The end of isolation.

- Nationalism is a disease (oh, so much yes.) Nations are the wrong taxonomy for the world.

- Fried eggs and omelettes.

Fried eggs and omelettes? In general, the world had crisp boundaries, yolk versus white. At work/at home. At school/not at school. We are moving to a more blended, less dichotomous approach because we are mixing our lives together. This is both bad (you’re getting work in my home life) and good (I’m getting learning in my day).

Can we drop kids into a reading environment and hope that they’ll learn to read? Reading is only 3,500 years old, much younger than our spoken language skills, so it has to be learned. But do we have to do it the way that we did it? Hmm. Interesting questions. This is where the tablets were dropped into illiterate villages without any support. (Does this require a seed autodidact in the group? There’s a lot to unpack there.) Nicholas says he made a huge mistake in naming the village in Ethiopia, which has corrupted the experiment, but at least the kids are getting to give press conferences!

Another massive amount of interesting information – sadly, no question time!

EduTECH AU 2015, Day 1, Higher Ed Leaders, “Revolutionising the Student Experience: Thinking Boldly” #edutechau

Posted: June 2, 2015 Filed under: Education | Tags: AI, artificial intelligence, blogging, collaboration, community, data visualisation, deakin, design, education, educational research, edutech2015, edutecha, edutechau, ethics, higher education, learning, learning analytics, machine intelligence, measurement, principles of design, resources, student perspective, students, teaching, thinking, tools, training, watson

Lucy Schulz, Deakin University, came to speak about initiatives in place at Deakin, including the IBM Watson initiative, which is currently a world-first for a University. How can a University collaborate to achieve success on a project in a short time? (Lucy thinks that this is the more interesting question. It’s not about the tool, it’s how they got there.)

Some brief facts on Deakin: 50,000 students, 11,000 of whom are on-line. Deakin’s question: how can we make the on-line experience as good as, if not better than, the face-to-face and how can on-line make face-to-face better?

Part of Deakin’s Student Experience focus was on delighting the student. I really like this. I made a comment recently that our learning technology design should be “Everything we do is valuable” and I realise now I should have added “and delightful!” The second part of the student strategy is for Deakin to be at the digital frontier, pushing on the leading edge. This includes understanding the drivers of change in the digital sphere: cultural, technological and social.

(An aside: I’m not a big fan of the term disruption. Disruption makes room for something but I’d rather talk about the something than the clearing. Personal bug, feel free to ignore.)

The Deakin Student Journey has a vision to bring students into the centre of Uni thinking, at every level and facet – students can be successful and feel supported in everything that they do at Deakin. There is a Deakin personality, an aspirational set of traits: “Brave, Stylish, Accessible, Inspiring and Savvy”.

Not feeling this as much but it’s hard to get a feel for something like this in 30 seconds so moving on.

What do students want in their learning? Easy to find and to use, it works and it’s personalised.

So, on to IBM’s Watson, the machine that won Jeopardy, thus reducing the set of games that humans can win against machines to Thumb Wars and Go. We then saw a video on Watson featuring a lot of keen students who coincidentally had a lot of nice things to say about Deakin and Watson. (Remember, I warned you earlier, I have a bit of a thing about shiny videos but ignore me, I’m a curmudgeon.)

The Watson software is embedded in a student portal that all students can access, which has required a great deal of investigation into how students communicate, structurally and semantically. This forms the questions and guides the answer. I was waiting to see how Watson was being used and it appears to be acting as a student advisor to improve student experience. (Need to look into this more once the day is over.)

Ah, yes, it’s on a student home page where they can ask Watson questions about things of importance to students. It doesn’t appear that they are actually programming the underlying system. (I’m a Computer Scientist in a faculty of Engineering, I always want to get my hands metaphorically dirty, or as dirty as you can get with 0s and 1s.) From looking at the demoed screens, one of the shiny student descriptions of Watson as “Siri plus Google” looks very apt.

Oh, it has cheekiness built in. How delightful. (I have a boundless capacity for whimsy and play but an inbuilt resistance to forced humour and mugging, which is regrettably all that the machines are capable of at the moment. I should confess Siri also rubs me the wrong way when it tries to be funny as I have a good memory and the patterns are obvious after a while. I grew up making ELIZA say stupid things – don’t judge me! 🙂 )

Watson has answered 26,000 questions since February, with an 80% accuracy for answers. The most common questions change according to time of semester, which is a nice confirmation of existing data. Watson is still being trained, with two more releases planned for this year and then another project launched around course and career advisors.

What they’ve learned – three things!

- Student voice is essential and you have to understand it.

- Have to take advantage of collaboration and interdependencies with other Deakin initiatives.

- Gained a new perspective on developing and publishing content for students. Short. Clear. Concise.

The challenges of revolution? (Oh, they’re always there.) Trying to prevent students falling through the cracks and make sure that this tool helps students feel valued and stay in contact. The introduction of new technologies has to be recognised in terms of what they change and what they improve.

Collaboration and engagement with your University and student community are essential!

Thanks for a great talk, Lucy. Be interesting to see what happens with Watson in the next generations.

EduTech Australia 2015, Day 1, Session 2, Higher Education IT Leaders #edutechau

Posted: June 2, 2015 Filed under: Education | Tags: blogging, community, customer-centric, design, digital technologies, education, educational research, edutech2015, edutechau, higher education, learning technologies, mark gregory, measurement, principles of design, resources, technology services, the university of adelaide, tools, University of Adelaide

I jumped streams (GASP) to attend Mark Gregory’s talk on “Building customer-centric IT services.” Mark is the CIO from my home institution, the University of Adelaide, and I work closely with him on a couple of projects. There’s an old saying that if you really want to know what’s going on in your IT branch, go and watch the CIO give a presentation away from home, which may also explain why I’m here. (Yes, I’m a dreadful cynic.)

Seven years ago, we had the worst customer-centric IT ratings in Australia and New Zealand, now we have some of the highest. That’s pretty impressive and, for what it’s worth, it reflects my experiences inside the institution.

Mark showed a picture of the ENIAC team, noting that the picture had been mocked up a bit, as additional men had been staged in the picture, which was a bit strange given that the ENIAC team were six women to one man. (Yes, this has been going on for a long time.) His point was that we’ve come a long way from the computer attended by acolytes as a central resource to computers everywhere that everyone can access and have specifically chosen. Technology is now something that you choose rather than something you put up with.

For Adelaide, on a typical day we see about 56,000 devices on the campus networks, only a quarter of which are University-provided. Over time, the customer requirement for centralised skills is shrinking as their own skills and the availability of outside (often cloud-based) resources increase. In 2020-2025, fewer and fewer people on campus will need centralised IT.

Is ERP important? Mark thinks ‘Meh’ because it’s being skinned with Apps and websites, the actual ERP running in the background. What about networks? Well, everyone’s got them. What about security? That’s more of an imposition and it’s driven by design issues. Security requirements are not a crowd pleaser.

So how will our IT services change over time?

A lot of us are moving from a Standard Operating Environment (SOE) to Bring Your Own Device (BYOD), which means saying farewell to the SOE. It’s really not desirable to be in this role, but it also drives a new financial model. We see 56,000 devices for 25,000 people – the mobility ship has sailed. How will we deal with it?

We’re moving from a portal model to an app model. The one stop shop is going and the new model is the build-it-yourself app store model where every device is effectively customised. The new user will not hang out in the portal environment.

Mark thinks we really, really need to increase the level of Self Help. A year ago, he put up 16 pages of PDFs and discovered that, over the year, 35,000 people went through self help compared to 70,000 on traditional help-desk. (I question whether the average person in the street knows what an IP address is, given most of what I see in movies. 😉 )

The newer operating systems require less help, but staff use of self-help outnumbers student use five to one. Students go somewhere else to get help. Interesting. Our approaches to VPN have to change – it’s not like your bank requires one. Our approaches to support have to change – students and staff work 24×7, so why were we only supporting them 8-6? Adelaide now has a contract service outside of those hours to take the 100 important calls that would have been terrible had they not been fixed.

Mark thinks that identity management (IDM) and access need to be fixed, as they make up 24% of their reported problems: password broken, I can’t get on and so on.

Security used to be on the device that the Uni owned. This has changed. Now it has to be data security, as you can’t guarantee that you own the device. Virtual desktops and virtual apps can offer data containerisation among their other benefits.

Let’s change the thinking from setting a perimeter to the person themselves. The boundaries are shifting and, let’s be honest, the inside of any network with 30,000 people is going to be swampy anyway.

Project management thinking is shifting from traditional to agile, which gets closer to the customer on shorter and smaller projects. But you have to change how you think about projects.

A lot of tools used to get built that worked with data but now people want to make this part of their decision infrastructure. Data quality is now very important.

The massive shift is from “provide and control” to “advise and enable”. (Sorry, auditors.) Another massive shift is from automation of a process that existed to support a business to help in designing the processes that will support the business. This is a driver back into policy space. (Sorry, central admin branch.) At the same time, Mark believes that they’re transitioning from a functional approach to a customer-centric focus. A common services layer will serve the student, L&T, research and admin groups but those common services may not be developed or even hosted inside the institution.

It’s not a surprise to anyone who’s been following what Mark has been doing, but he believes that the role is shifting from IT operations to University strategy.

Some customers are going to struggle. Some people will always need help. But what about those highly capable individuals who could help you? This is where innovation and co-creation can take place, with specific people across the University.

Mark wants Uni IT organisations to disrupt themselves. (The Go8 are rather conservative and are not prone to discussing disruption, let alone disrupting.)

Basically, if customers can do it, make themselves happy and get what they want working, why are you in their way? If they can do it themselves, then get out of the way except for those things where you add value and make the experience better. We’re helping people who are desperate but we’re not putting as much effort into the innovators and more radical thinkers. Mark’s belief is that investing more effort into collaboration, co-creation and innovation is the way to go.

It looks risky but is it? What happens if you put technology out there? How do you get things happening?

Mark wants us to move beyond Service Level Agreements, which he describes as the bottom bar. No great athlete performs at the top level because of an SLA. This requires a move to meaningful metrics. (Very similar to student assessment, of course! Same problem!) Just because we measure something doesn’t make it meaningful!

We tended to hire skills to provide IT support. Mark believes that we should now be hiring attributes: leaders, drivers, innovators. The customer wants to get somewhere. How can we help them?

Lots to think about – thanks, Mark!

EduTech Australia 2015, Day 1, Session 1, Higher Education Leaders @jselingo #edutechau

Posted: June 2, 2015 Filed under: Education, Opinion | Tags: advocacy, blogging, education, educational research, edutech2015, edutechau, higher education, Jeffrey Selingo, learning, students, teaching, teaching approaches, thinking, tools, workload

Emeritus Professor Steven Schwartz, AM, opened the Higher Ed leaders session, following a very punchy video on how digital is doing “zoom” and “rock and roll” things. (I’m a bit jaded about “tech wow” videos but this one was pretty good. It reinforced the fact that over 60% of all web browsing is carried out on mobile devices, which should be a nudge to all of us designing for the web.)

There will be roughly 5,000 participants in the totally monstrous Brisbane Convention Centre. There are many people here that I know but I’m beginning to doubt whether I’m going to see many of them unless they’re speaking – there’s a mass of educational humanity here, people!

The opening talk was “The Universities of tomorrow, the future of anytime and anywhere learning”, presented by Jeffrey Selingo. Jeff writes books, “College Unbound” among others, and is a regular contributor to the Washington Post and the Chronicle. (I live for the day I can put “Education Visionary” on my slides with even a shred of this credibility. As a note, remarks in parentheses are probably my editorial comments.)

(I’ve linked to Jeff on Twitter. Please correct me on anything, Jeff!)

Jeff set out to explore the future of higher learning, taking time out from editing the Chronicle. He wanted to tell the story of higher ed for the coming decade, for those parents and students heading towards it now, rather than being in it now. Jeff approached it as a report, rather than an academic paper, and is very open about the fact that he’s not conducting research. “In journalism, if you have three anecdotes, you have a trend.”

(I’m tempted to claim phenomenography but I know you’ll howl me down. And rightly so!)

Higher Ed is something that, now, you encounter once in your life and then move on from. But the growth in knowledge and ongoing explosion of new information doesn’t match that model. Our Higher Ed model is based on an older tradition and an older informational model.

(This is great news for me, I’m a strong advocate of an integrated and lifelong Higher Ed experience)

(Slides for this talk are available at http://jeffselingo.com/conference but be warned, you have to sign up for a newsletter to get the slides.)

Jeff then talked about his start, in one of the initial US college rankings, before we all ranked each other into the ground. The ‘prestige race’ as he refers to it. Every university around the world wanted to move up the ladder. (Except for the ones on the top, kicking at the rungs below, no doubt.)

“Prestige is to higher education as profit is to corporations.”

According to Caroline Hoxby, Higher Ed student flow has increased as students move around the world. Students who can move to different Universities now tend to do so, and they can exercise choices around the world. This leads to predictions like “the bottom 25% of Unis will go out of business or merge” (Clay Christensen) – Jeff disagrees with this although he thinks change is inevitable.

We have a model of new, technologically innovative and agile companies destroying the old leaders. Netflix ate Blockbuster. Amazon ate Borders. Apple ate… well, everybody… but let’s say Tower Records, shall we? Jeff noted that journalism’s upheaval has been phenomenal, despite the view of journalism as a ‘public trust’. People didn’t want to believe what was going to happen to their industry.

Jeff believes that students are going to drive the change. He believes that students are often labelled as “Traditional” (ex-school, 18-22, direct entry) and “non-Traditional” (adult learners, didn’t enter directly.) But what this doesn’t capture is the mindset or motivation of students to go to college. (MOOC motivation issues, anyone?)

What do students want to get out of their degree?

(Don’t ask difficult questions like that, Jeff! It is, of course, a great question and one we try to ask but I’m not sure we always listen.)

Why are you going? What do you want? What do you want your degree to look like? Jeff asked and got six ‘buckets’ of students in two groups, split across the trad/non-trad age groups.

Group 1 are the younger group and they break down into:

- Young Academics (24%) – the trad high-performing students who have mastered the earlier education systems and usually have a privileged background

- Coming of Age (11%) – Don’t quite know what they want from Uni but they were going to college because it was the place to go to become an adult. This is getting them ready to go to the next step, the work force.

- Career Starters (18%) – Students who see the Uni as a means to the end, credentialing for the job that they want. Get through Uni as quickly as possible.

Group 2 are older:

- Career Accelerators (21%) – Older students who are looking to get new credentials to advance themselves in their current field.

- Industry Switchers – Credential changers to move to a new industry.

- Adult Wanderers – needed a degree because that was what the market told them but they weren’t sure why

(Apologies for losing some of the stats, the room’s quite full and some people had to walk past me.)

But that’s what students are doing – what skills do employers actually require?

- Written and Oral communication

- Managing multiple priorities

- Collaboration

- Problem solving

People used to go to college for a broad knowledge base and then have that honed by an employer or graduate school to focus them into a discipline. Now, both of these are expected at the Undergrad level, which is fascinating given that we don’t have extra years to add to the degree. But we’re not preparing students better to enter college, nor do we have the space for experiential learning.

Expectations are greater than ever but are we delivering?

When do we need higher education? Well, it used to be “education”, then “employment”, then “retirement”. In the new model (from Tony Carnevale at Georgetown), we have “education”, then “learning and earning”, then “full-time work and on-the-job training”, “transition to retirement” and, finally, “full retirement”. Students are only really focusing on their careers at around 30, after leaving the earlier learning phases. This, Jeff believes, is where we are failing to play an important role for students in their 20s, and that isn’t helping them with their failure to launch.

Jeff was wondering how different life would be for the future, especially given how much longer we are going to be living. How does that Uni experience of “once in our lives, in one physical place” fit in, if people may switch jobs much more frequently over a longer life? The average person apparently switches jobs every four years – no wonder most of the software systems I use are so bad!

Jeff’s “College Unbound” future is student-driven and student-centred. It’s not a box that is entered at 18 and exited 4 years later, it’s a platform for lifelong learning.

“The illiterate will be those who cannot learn, unlearn and relearn” – Alvin Toffler

Jeff doesn’t think that there will be one simple path to the future. Competing on a single playing field has made institutions in the higher ed sector highly similar. How can we personalise pathways for the different groups of students that we saw above? Predictive analytics are important here – technology is vital. Good future education will be adaptive and experiential, combining the trad classroom with online systems, apprenticeships and, overall, removing the requirement to reside at or near your college.

Jeff talked about some new models, starting with the Swirl, the unbundled degree across different institutions, traditional and not. Multiple universities, multiple experiences = degree.

Then there’s mixing course types, combining face-to-face with hybrid and online courses to speed up graduation. (There is a strong philosophical point on this that I hope to get back to later: why are we racing through University?)

Finally, competency-based learning allowed a learner to have class lengths from 2 weeks to 14 weeks, based on what she already knew. (I am a really serious advocate of this approach. I advocated switching our entire first year for Engineering to a competency-based approach, but I’ll write more about that later on. And, no, we didn’t do it, but at least we thought about it.)

In the mix are smaller chunks of information and just-in-time learning. Anyone who has used YouTube for a Photoshop tutorial has had a positive (well, as positive as it can be) experience with this. Why can’t we do this with any number of higher ed courses?

A note on the Stanford 2025 Design School exercise: open-loop education. Acceptance to Stanford would give you access to 6 years of education that you could use at any point in your life. Take two years, go out and work a bit, come back. Why isn’t the University at the centre of our lifelong involvement with learning?

The distance between producer and consumer is shrinking, whether it’s online stores or 3-D printing. The example given was MarginalRevolutionUniversity, a homegrown University developed by a former George Mason academic.

As always, the MOOC dropout rate was raised. Yes, only 10% complete, but Jeff’s interviews confirm what we already know: most of those students had no intention of completing. They didn’t think of the MOOC as a course or as part of a degree, they were dipping in to get what they needed, when they needed it. Just like those YouTube Photoshop tutorials.

The difficult question is how to certify this. And… here are badges again, part of the certification of learning, and the challenge is how we use them.

Jeff thinks that there are still benefits to the residential experience, although assisted and augmented by technology:

- Faculty mentoring

- Undergraduate research (team work, open problems)

- Be creative. Take Risks. Learn how to fail.

- Cross-cultural experience.

Of course, not all of this is available to everyone. And what is the return on investment for this? LinkedIn finally has enough data that we can start to answer that question. (People will tell LinkedIn things that they won’t tell other people, apparently.) This may change the ranking game because rankings can now be conducted on outputs rather than inputs. Watch this space?

The world is changing. What does Jeff think? Ranking is going to change and we need to be able to prove our value. We have to move beyond isolated disciplines. Skill certification is going to get harder but the overall result should be better. University is for life, not just for three years. This will require us to build deep academic alliances that go beyond our traditional boxes.

Ok, prepping for the next talk!

Think. Create. Code. Vis! (@edXOnline, @UniofAdelaide, @cserAdelaide, @code101x, #code101x)

Posted: April 30, 2015 Filed under: Education, Opinion | Tags: #code101x, advocacy, blogging, collaboration, community, curriculum, data visualisation, education, educational problem, educational research, edx, higher education, learning, measurement, MOOC, moocs, reflection, resources, teaching, teaching approaches, thinking, tools, universal principles of design

I just posted about the massive growth in our new on-line introductory programming course, but let’s look at the numbers so we can work out what’s going on and, maybe, what led to that level of success. (Spoilers: central support from EdX helped a huge amount.) So let’s get to the data!

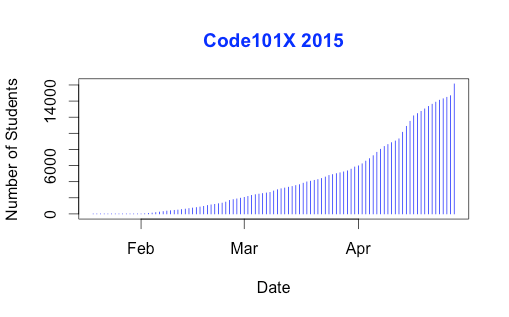

I love visualised data so let’s look at the growth in enrolments over time – this is really simple graphical stuff as we’re spending time getting ready for the course at the moment! We’ve had great support from the EdX team through mail-outs and Twitter and you can see these in the ‘jumps’ in the data that occurred at the beginning, halfway through April and again at the end. Or can you?

Hmm, this is a large number, so it’s not all that easy to see the detail at the end. Let’s zoom in and change the layout of the data over to steps so we can see things more easily. (It’s worth noting that I’m using the free R statistical package to do all of this. I can change one line in my R program and regenerate all of my graphs and check my analysis. When you can program, you can really save time on things like this by using tools like R.)

Now you can see where that increase started and then the big jump around the time that e-mail advertising started, circled. That large spike at the end is around 1500 students, which means that we jumped 10% in a day.
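Spotting those jumps doesn’t have to rely on eyeballing a graph. The sketch below turns a cumulative enrolment series into daily increments and flags any day where new enrolments exceed a chosen fraction of the previous day’s total. It is written in Python purely for illustration (our own analysis was done in R). The function names, the 5% threshold and the sample series are all hypothetical: the numbers are made up to mimic a 1,500-student jump on a base of roughly 15,000. They are not our enrolment data.

```python
def daily_increments(cumulative):
    """Turn a cumulative enrolment series into day-by-day new enrolments."""
    return [later - earlier for earlier, later in zip(cumulative, cumulative[1:])]


def flag_jumps(cumulative, threshold=0.05):
    """Return (day, increment, fraction) for days whose growth exceeds threshold.

    'day' is the index into the cumulative series of the total that
    includes the jump; 'fraction' is the increment divided by the
    previous day's total (hypothetical helper, illustrative threshold).
    """
    flagged = []
    increments = daily_increments(cumulative)
    for day, (previous, increment) in enumerate(zip(cumulative, increments), start=1):
        fraction = increment / previous
        if fraction > threshold:
            flagged.append((day, increment, fraction))
    return flagged


# Made-up series: a steady trickle, then one mail-out style jump.
sample = [15000, 15100, 15200, 16700, 16800]
print(flag_jumps(sample))  # flags day 3: +1,500 on 15,200, about 9.9%
```

A relative threshold suits this kind of data better than a fixed one, because a jump of 1,500 matters far more on a base of 1,000 than on a base of 15,000.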

When we started looking at this data, we wanted to get a feeling for how many students we might get. This is another common use of analysis – trying to work out what is going to happen based on what has already happened.

As a quick overview, we tried to predict the future based on three different assumptions:

- that the growth from day to day would be roughly the same, which is assuming linear growth.

- that the growth would increase more quickly, with the amount of increase doubling every day (this isn’t the same as the total number of students doubling every day).

- that the growth would increase even more quickly than that, although not as quickly as if the number of students were doubling every day.
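The post doesn’t spell out the exact models behind these assumptions. One plausible reading, and it is only an assumption here, is a least-squares polynomial fit of increasing degree: degree 1 (linear), degree 2 and degree 3, all of which grow more slowly than a true doubling. The wording of Assumption 2 doesn’t pin down a single model, so treat the degree-2 fit as illustrative. The Python sketch below fits each degree with the normal equations and extrapolates to a chosen day. `fit_polynomial`, `predict` and `forecast` are hypothetical names, not anything from our R scripts.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations.

    Returns coefficients [c0, c1, ..., c_degree] with y ~ sum(c_k * x**k).
    """
    n = degree + 1
    # Normal equations A c = b: A[i][j] = sum(x^(i+j)), b[i] = sum(y * x^i).
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]
    # Back substitution.
    coeffs = [0.0] * n
    for row in reversed(range(n)):
        remainder = b[row] - sum(a[row][k] * coeffs[k] for k in range(row + 1, n))
        coeffs[row] = remainder / a[row][row]
    return coeffs


def predict(coeffs, x):
    """Evaluate the fitted polynomial at x."""
    return sum(c * x ** k for k, c in enumerate(coeffs))


def forecast(days, totals, final_day):
    """Fit degrees 1-3 to cumulative totals and extrapolate each to final_day."""
    labels = {1: "linear", 2: "quadratic", 3: "cubic"}
    return {labels[d]: predict(fit_polynomial(days, totals, d), final_day)
            for d in (1, 2, 3)}
```

Given a list of day numbers and cumulative totals, `forecast(days, totals, final_day)` returns one projection per assumption. On growth that is speeding up, the linear projection comes in lowest, which matches the pattern described next. For real work, a library routine (for example, `lm` with `poly` in R) is numerically safer than hand-rolled normal equations.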

If Assumption 1 was correct, then we would expect the graph to look like a straight line, rising diagonally. It’s not. (As it is, this model predicted that we would only get 11,780 students. We crossed that line about 2 weeks ago.)

So we know that our model must take into account the faster growth, but those leaps in the data are changes caused by things outside of our control – EdX sending out a mail message appears to cause a jump of roughly 800-1,600 students, and it persists for a couple of days.

Let’s look at what the models predicted. Assumption 2 predicted a final student number around 15,680. Uhh. No. Assumption 3 predicted a final student number around 17,000, with an upper bound of 17,730.

Hmm. Interesting. We’ve just hit 17,571 so it looks like all of our measures need to take into account the “EdX” boost. But, as estimates go, Assumption 3 gave us a workable ballpark and we’ll probably use it again for the next time that we do this.
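To put numbers on “workable ballpark”, the short Python sketch below compares each assumption’s predicted final enrolment (the figures quoted above) with the 17,571 students enrolled so far. It reports the signed error as a fraction of the actual figure. The names are illustrative only.

```python
ACTUAL = 17_571        # enrolments at the time of writing
UPPER_BOUND = 17_730   # Assumption 3's upper bound

predictions = {
    "Assumption 1": 11_780,
    "Assumption 2": 15_680,
    "Assumption 3": 17_000,
}


def relative_error(predicted, actual=ACTUAL):
    """Signed error as a fraction of the actual figure (negative = under-prediction)."""
    return (predicted - actual) / actual


for name, value in predictions.items():
    print(f"{name}: {value:,} ({relative_error(value):+.1%})")
# Assumption 1: 11,780 (-33.0%)
# Assumption 2: 15,680 (-10.8%)
# Assumption 3: 17,000 (-3.2%)

print(ACTUAL <= UPPER_BOUND)  # True: still inside Assumption 3's upper bound
```

Since enrolments were still rising, these errors are provisional and will grow for any model whose prediction is already exceeded.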

Now let’s look at demographic data. We now have 171-172 countries (it varies a little), but how are we going for participation across gender, age and degree status? Giving this information to EdX is totally voluntary but, as long as we take that into account, we can make some interesting discoveries.

Our median student age is 25, with roughly 40% under 25 and roughly 40% from 26 to 40. That means roughly 20% are 41 or over. (It’s not surprising that the graph sits to one side like that. If the left tail was the same size as the right tail, we’d be dealing with people who were -50.)

The gender data is a bit harder to display because we have four categories: male, female, other and not saying. In terms of female representation, 34% of students have defined their gender as female. If we look at the declared male numbers, we see that 58% of students have declared themselves to be male. Taking into account all categories, this means that our female participant percentage could be as high as 42% (everyone who did not declare as male) but is at least 34%. That’s much higher than usual participation rates in face-to-face Computer Science and is really good news in terms of getting programming knowledge out there.
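The bound on female participation follows directly from the declared figures. The lower bound counts only students who declared as female. The upper bound takes the most generous reading, which treats everyone who did not declare as male (the “other” and “not saying” responses included) as potentially female. A minimal Python sketch of that arithmetic, with a hypothetical function name:

```python
def female_share_bounds(percentages):
    """Return (lower, upper) bounds on the female share, in percent.

    Lower bound: only students who declared 'female'.
    Upper bound: everyone who did not declare 'male', i.e. the most
    generous reading, where every undeclared or 'other' response
    could be female.
    """
    lower = percentages["female"]
    upper = 100 - percentages["male"]
    return lower, upper


print(female_share_bounds({"female": 34, "male": 58}))  # (34, 42)
```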

We’re currently analysing our growth by all of these groupings to work out which approach is the best for which group. Do people prefer Twitter, mail-outs, community links or something else when it comes to getting into the course?

Anyway, lots more to think about and many more posts to come. But we’re on and going. Come and join us!

Think. Create. Code. Wow! (@edXOnline, @UniofAdelaide, @cserAdelaide, @code101x, #code101x)

Posted: April 30, 2015 Filed under: Education, Opinion | Tags: #code101x, advocacy, authenticity, blogging, community, cser digital technologies, curriculum, digital technologies, education, educational problem, educational research, ethics, higher education, Hugh Davis, learning, MOOC, moocs, on-line learning, research, Southampton, student perspective, teaching, teaching approaches, thinking, tools, University of Southampton

Things are really exciting here because, after the success of our F-6 on-line course to support teachers for digital technologies, the Computer Science Education Research group are launching their first massive open on-line course (MOOC) through AdelaideX, the partnership between the University of Adelaide and EdX. (We’re also about to launch our new 7-8 course for teachers – watch this space!)

Our EdX course is called “Think. Create. Code.” and it’s open right now for Week 0, although the first week of real content doesn’t go live until the 30th. If you’re not already connected with us, you can also follow us on Facebook (code101x) or Twitter (@code101x), or search for the hashtag #code101x. (Yes, we like to be consistent.)

I am slightly stunned to report that, less than 24 hours before the first content starts to roll out, we have 17,531 students enrolled across 172 countries. Not only that, but when we look at gender breakdown, we have somewhere between 34% and 42% women (not everyone chooses to declare a gender). For an area that struggles with female participation, this is great news.

I’ll save the visualisation data for another post, so let’s quickly talk about the MOOC itself. We’re taking a 6-week approach, where students focus on developing artwork and animation using the Processing language. It requires no prior knowledge and runs inside a browser. The interface that has been developed by the local Adelaide team (thank you for all of your hard work!) is outstanding and it’s really easy to make things happen.

I love this! One of the biggest obstacles to coding is having to wait until you see what happens and this can lead to frustration and bad habits. In Processing you can have a circle on the screen in a matter of seconds and you can start playing with colour in the next second. There’s a lot going on behind the screen to make it this easy but the student doesn’t need to know it and can get down to learning. Excellent!