Updated previous post: @WalidahImarisha #worldcon #sasquan

Posted: August 24, 2015 | Filed under: Education, Opinion | Tags: advocacy, authenticity, blogging, collaboration, community, curriculum, design, education, educational research, equity, fantasy, higher education, research, sasquan, science fiction, worldcon

Walidah Imarisha very generously continued the discussion of my last piece with me on Twitter. I have updated that piece to include her thoughts and to provide vital additional discussion. As always, don't read me talking about things when you can read the words of the people who are out there fixing, changing the narrative, fighting and winning.

Thank you, Walidah!

The Only Way Forward is With No Names @iamajanibrown @WalidahImarisha #afrofuturism #worldcon #sasquan

Posted: August 23, 2015 | Filed under: Education | Tags: advocacy, AfroFuturism, Anonymous review, authenticity, blogging, collaboration, community, curriculum, design, education, educational research, equity, fantasy, higher education, research, sasquan, science fiction, SF&F, worldcon

Edit: Walidah Imarisha and I had a discussion on Twitter after I released this piece, and I wanted to add her thoughts and part of our discussion. I've added it to the end so that you'll have context, but I mention it here because her thoughts are the ones that you must read before you leave this piece. Never listen to me when you can be listening to the people who are living this and fighting it.

I'm currently at the World Science Fiction Convention in Spokane, Washington State. As always, my focus is education and (no surprise to long-term readers) equity. I've had the opportunity to attend some amazing panels. One was on the experience of women working in art, publishing and game production, and on female characters in video games. Others discussed non-white presence in fiction (#AfroFuturism with Professor Ajani Brown), the changes between the Marvel Universe in film and comic form, and how we can use Science Fiction & Fantasy in the classroom to address social issues without having to engage directly with (often depressing) news sources. Both of the latter panels were excellent. In the Marvel one, with Tom Smith, Annalee Flower Horne, Cassandra Rose Clarke, and Professor Brown, there was a lot of discussion of both the new Afro-American characters in movies and TV (Deathlok, Storm and Falcon) and how much they had changed from the comics.

I’m going to discuss what I saw and lead towards my point: that all assessment of work for its publishing potential should, where it is possible and sensible, be carried out blind, without knowledge of who wrote it.

I've written on this before, both here (where I argue that current publishing may not be doing what we want for the long-term benefit of the community and the publishers themselves) and here, where we identify that systematic bias against people who are not Western men is rampant and apparently almost inescapable as long as we can see a female name. Very recently, this Jezebel article identified that changing the author's name on a manuscript from female to male not only increased the response rate and reduced the waiting time, it changed the type of feedback given. The woman's characters were "feisty"; the man's weren't. Same characters. It doesn't matter whether you think you're being sexist or not, and it doesn't even matter (according to the PNAS study in the second link) whether you're a man or a woman: the presence of a female name changes the level of respect attached to a work and also the level of reward or appreciation offered in an assessment process. There are similar works that clearly identify that this problem is even worse for People of Colour. (Look up Intersectionality if you don't know what I'm talking about.) I'm not saying that all of these people are trying to discriminate, but the evidence we have says that the social conditioning that leads to sexism is powerful and dominating.

Now let's get back to the panels. The first panel was "Female Characters in Video Games", with Andrea Stewart, Maurine Starkey, Annalee Flower Horne, Lauren Roy and Tanglwyst de Holloway. While discussing the growing market for female characters, the panel identified the ongoing problems and discrimination against women in the industry. 22% of professionals in the field are women, which sounds awful until you realise that this figure was 11% in 2009. However, Maurine had had her artwork recognised as "great" when someone thought it was a man's, and dismissed as "oh, drawn like a woman" when the true owner was revealed. And this is someone being explicit. The message of the panel was very positive: things were getting better. However, it was obvious that knowing someone was a woman changed how people valued their work, or even how their activities were described. "Casual gaming" is often a term that describes what women do; if women take up a gaming platform (and they are a huge portion of the market) then it often gets labelled "casual gaming".

So, point 1, assessing work at a professional level is apparently hard to do objectively when we know the gender of people. Moving on.

The first panel on Friday dealt with AfroFuturism, which looks at the long-standing philosophical and artistic expression of alternative realities relating to people of African descent. This can be traced from the Egyptian origins of mystic and astrological architecture and religions, through the tribal dances and mask ceremonies of other parts of Africa, to the P-Funk Mothership and science-fiction works published in the middle of vinyl albums. There are strong notions of carving out or refining identity in order to break oppressive narratives and re-establish agency. AfroFuturism looks into creating new futures and narratives, also allowing for reinvention to escape the past, which is a powerful tool for liberation. People can be put into boxes and they want to break out to liberate themselves and, too often, if we know that someone can be put into a box then we have a nasty tendency (implicit cognitive bias) to jam them back in. No wonder AfroFuturism is seen as a powerful force: it is an assault on the whole mean, racist narrative that does things like call groups of white people "protesters" or "concerned citizens", and groups of black people "rioters".

(If you follow me on Twitter, you’ve seen a fair bit of this. If you’re not following me on Twitter, @nickfalkner is the way to go.)

So, point 2: if we know someone's race, then we are more likely to enforce a stereotypical and oppressive narrative when we are outside of their culture. Writers inside the culture can write to liberate and to redefine identity, and this probably means we need to see more of this.

I want to focus on the final panel, “Saving the World through Science Fiction: SF in the Classroom”, with Ben Cartwright, Ajani Brown (again!), Walidah Imarisha and Charlotte Lewis Brown. There are many issues facing our students on a day-to-day basis and it can be very hard to engage with some of them because it is confronting to have to address your own biases when you talk about the real world. But you can talk about racism with aliens, xenophobia with a planetary invasion, the horrors of war with apocalyptic fiction… and it’s not the nightly news. People can confront their biases without confronting them. That’s a very powerful technique for changing the world. It’s awesome.

Point 3, then, is that narratives are important and, with careful framing, we can discuss very complicated things, get away from the sheer weight of our biases and reframe a discussion to talk about difficult things without having to resort to violence or conflict. This reinforces Point 2: we need more stories from other viewpoints to allow us to think about important issues.

We are a narrative and a mythic species: storytelling allows us to explain our universe. Storytelling defines our universe, whether it’s religion, notions of family or sense of state.

What I take from all of these panels is that many of the stories that we want to be reading, that are necessary for the healing and strengthening of our society, should be coming from groups who are traditionally not proportionally represented: women, People of Colour, Women of Colour, basically anyone who isn’t recognised as a white man in the Western Tradition. This isn’t to say that everything has to be one form but, instead, that we should be putting systems in place to get the best stories from as wide a range as possible, in order to let SF&F educate, change and grow the world. This doesn’t even touch on the Matthew Effect, where we are more likely to positively value a work if we have an existing positive relationship with the author, even if said work is not actually very good.

And this is why, given all of the evidence we have of cognitive biases changing the way people think about work based on the name, the most likely approach to improving the range of stories that we end up publishing is to judge as many works as we can without knowing who wrote them. If we wanted to take it further, we could even ask people to briefly explain why they did or didn't like a work. The comments on the Jezebel author's book make it clear that, with those comments, we can clearly identify a bias in play. "It's not for us" and similar responses are not sufficiently transparent for us to see whether the system is working. (Apologies to the hard-working editors out there, I know this is a big demand. Anonymity is a great start. 🙂 )

Now, for some books and works, you have to know who wrote them; my textbook, for example, depends upon my academic credentials and my published work, hence my identity is part of the validity of academic work. But for short fiction, for books? Perhaps it's time to look at all of the evidence, and at all of the efforts to widen the range of voices we hear, and consider a commitment to anonymous review so that SF&F will be a powerful force for thought and change in the decades to come.

Thank you to all of the amazing panellists. You made everyone think and sent out powerful and positive messages. Thank you, so much!

Edit: As mentioned above, Walidah and I had a discussion on Twitter that extended from this piece. Walidah's point was about changing the system so that we no longer have to hide identity to eliminate bias, and I totally agree with this. Our goal has to be to create a space where bias no longer exists, where the assumption that the hierarchical dominance is white, cis, straight and male is no longer the default. Also, while SF&F is a great tool, it does not replace having the necessary and actual conversations about oppression. Our goal should never be to erase people of colour and replace them with aliens and dwarves just because white people don't want to talk about race. While narrative engineering can work, many people do not transfer the knowledge from analogy to reality, and this is why these authentic discussions of real situations must also exist. When we sit purely in analogy, we risk reinforcing inequality if we don't tie it back down to Earth.

I am still trying to attack a biased system to widen the narrative and allow more space for other voices but, as Walidah notes, this is catering to the privileged, rather than empowering the oppressed to speak their own stories. And, of course, talking about oppression leads those on top of the hierarchy to assume you are oppressed. Walidah mentioned Katharine Burdekin's Swastika Night as part of this. Our goal must be to remove bias. What I spoke about above is one way, but it is very much born of privilege, and we cannot lose sight of the necessity of empowerment, of a constant commitment to ensuring the visibility of other voices, and of hearing the stories of the oppressed from them, not passed through white academics like me.

Seriously, if you can read me OR someone else who has a more authentic connection? Please read that someone else.

Walidah's recent work includes co-editing, with adrienne maree brown, "Octavia's Brood: Science Fiction Stories from Social Justice Movements", a book of 20 short stories that is winging its way to me as we speak. I am so grateful that she took the time to respond to this post and help me (I hope) to make it stronger.

Designing a MOOC: how far did it reach? #csed

Posted: June 10, 2015 | Filed under: Education, Opinion | Tags: advocacy, authenticity, blogging, collaboration, community, computer science education, constructivist, contributing student pedagogy, curriculum, data visualisation, design, education, educational problem, educational research, ethics, feedback, higher education, in the student's head, learning, measurement, MOOC, moocs, principles of design, reflection, resources, students, teaching, teaching approaches, thinking, tools

Mark Guzdial posted on his blog about "Moving Beyond MOOCS: Could we move to understanding learning and teaching?" and discusses the aspects of MOOC hype that still linger. (I've spoken about MOOCs done badly before, as well as recording the thoughts of people like Hugh Davis from Southampton.) One of Mark's paragraphs reads:

“The value of being in the front row of a class is that you talk with the teacher. Getting physically closer to the lecturer doesn’t improve learning. Engagement improves learning. A MOOC puts everyone at the back of the class, listening only and doing the homework”

My reply to this was:

“You can probably guess that I have two responses here, the first is that the front row is not available to many in the real world in the first place, with the second being that, for far too many people, any seat in the classroom is better than none.

But I am involved in a, for us, large MOOC so my responses have to be regarded in that light. Thanks for the post!”

Mark, of course, called my bluff and responded with:

“Nick, I know that you know the literature in this space, and care about design and assessment. Can you say something about how you designed your MOOC to reach those who would not otherwise get access to formal educational opportunities? And since your MOOC has started, do you know yet if you achieved that goal — are you reaching people who would not otherwise get access?”

So here is that response. Thanks for the nudge, Mark! The answer is a bit long but please bear with me. We will be posting a longer summary after the course is completed, in a month or so; consider this the unedited taster. I'm putting this here early, prior to the detailed statistical work, so you can see where we are. All the numbers below are fresh off the system, to drive discussion and to answer Mark's question at, pretty much, a conceptual level.

First up, as some background for everyone, the MOOC team I’m working with is the University of Adelaide‘s Computer Science Education Research group, led by A/Prof Katrina Falkner, with me (Dr Nick Falkner), Dr Rebecca Vivian, and Dr Claudia Szabo.

I'll start by noting that we've been working to solve the inherent scaling issues of the front of the classroom for some time. If I had a class of 12 then there would be no problem engaging with everyone, but I keep finding myself in rooms of 100+, which forces some people to sit away from me and also limits the number of meaningful interactions I can have with individuals in one setting. While I take Mark's point about the front of the classroom, and the associated research is pretty solid on this, we encountered an inherent problem when we identified that students were better off down the front… and yet we kept teaching to rooms with more student than front. I'll go out on a limb and say that this is actually a moral issue that we, as a sector, have had to look at and then ignore in the face of constrained resources. The nature of large spaces and people, coupled with our inability to hover, means that we can either choose to have a single row of students in a semi-circle facing us, or we accept that, after a relatively small number of students or rows, we have constructed a space that is inherently divided by privilege and will lead to disengagement.

So, Katrina’s and my first foray into this space was dealing with the problem in the physical lecture spaces that we had, with the 100+ classes that we had.

Katrina and I published a paper on "contributing student pedagogy" in Computer Science Education 22(4), 2012, which identified ways of forming valued small collaboration groups to promote engagement and drive skill development. Ultimately, by reducing the class to a smaller number of clusters and making those clusters pedagogically useful, I can bring a 'front of the class'-like experience to every group I speak to. We have given talks and applied sessions on this, including a special session at SIGCSE, because we think it's a useful technique that reduces the amount of 'front privilege' while extending the amount of 'front benefit'. (Read the paper for the actual detail; I am skimping on summary here.)

We then got involved in the support of the national Digital Technologies curriculum for primary and middle school teachers across Australia, after being invited to produce a support MOOC (really a SPOC, small, private, on-line course) by Google. The target learners were teachers who were about to teach or who were teaching into, initially, Foundation to Year 6 and thus had degrees but potentially no experience in this area. (I’ve written about this before and you can find more detail on this here, where I also thanked my previous teachers!)

The motivation of this group of learners was different from that of a traditional MOOC because (a) everyone had both a degree and probable employment in the sector, which largely reduced opportunistic registration, and (b) Australian teachers are required to complete a certain number of professional development (PD) hours a year. Through a number of discussions across the key groups, we had our course recognised as PD, which meant that doing our course was considered valuable. That said, almost all of the teachers we spoke to were furiously keen for this information anyway, and my belief is that the PD was very much 'icing' rather than 'cake'. (Thank you again to all of the teachers who have spent time taking our course; we really hope it's been useful.)

To discuss access and reach, we can measure the teachers who've taken the course (somewhere in the low thousands) and then estimate the number of students potentially assisted, and that's when it gets a little crazy, because that's somewhere around 30,000-40,000.

In his talk at CSEDU 2014, Hugh Davis identified the student groups who get involved in MOOCs as follows. The majority of people undertaking MOOCs were life-long learners (older, degreed, M/F 50/50), people seeking skills via PD, and those with poor access to Higher Ed. There is also a group of Uni 'tasters', but it is very, very small. (I think we can agree that tasting a MOOC is not tasting a campus-based Uni experience. Less ivy, for starters.) The three approaches to the course once inside were auditing, completing and sampling, and it's this final one that I want to emphasise, because it brings us to one of the distinctive features of MOOCs: we are not in control of when people decide that they are satisfied with the free education they are accessing, unlike our strong gatekeeping on traditional courses.

I am in total agreement that a MOOC is not the same as a classroom but, also, that it is not the same as a traditional course, where we define how the student will achieve their goals and how they will know when they have completed. MOOCs function far more like many people’s experience of web browsing: they hunt for what they want and stop when they have it, thus the sampling engagement pattern above.

(As an aside, does this mean that a course that is perceived as ‘all back of class’ will rapidly be abandoned because it is distasteful? This makes the student-consumer a much more powerful player in their own educational market and is potentially worth remembering.)

Knowing these different approaches, we designed the individual subjects and overall program so that it was very much up to the participant how much they chose to take and individual modules were designed to be relatively self-contained, while fitting into a well-designed overall flow that built in terms of complexity and towards more abstract concepts. Thus, we supported auditing, completing and sampling, whereas our usual face-to-face (f2f) courses only support the first two in a way that we can measure.

As Hugh notes, and as we agree through growing experience, marking and progress measures at scale are very difficult, especially when automated marking is not enough or not feasible. Based on our earlier work on contributing collaboration in the classroom, for the F-6 Teacher MOOC we used a strong peer-assessment model in which contributions and discussions were heavily linked. Because of the nature of the cohort, geographical and year-level groups formed, which then conducted additional sessions and produced shared material at a slightly terrifying rate. We took the approach that we were not telling teachers how to teach; we were helping them to develop and share materials that would assist in their teaching. This reduced potential divisions and allowed us to establish a mutually respectful relationship that facilitated openness.

(It's worth noting that the courseware is Creative Commons, open and free. There are people reassembling the course for their specific take on the school system as we speak. We have a national curriculum but a state-focused approach to education, with public and many independent systems. Nobody makes any money out of providing this course to teachers, and the material will always be free. Thank you again to Google for their ongoing support and funding!)

Overall, in this first F-6 MOOC, we had higher than usual retention of students and higher than usual participation, for the reasons I've outlined above. But this material was curriculum support for teachers of young students, all of whom were pre-programming, and it could be contained in videos and on-line sharing of materials and discussion. We were also in the MOOC sweet-spot: existing degreed learners, a PD driver, and a PD requirement that depended on progressive demonstration of goal achievement, which we recognised post-course with a pre-approved certificate form. (Important note: if you are doing this, clear up how the PD requirements are met and how they need to be reported back as early as you can. It meant that we could give people something valuable in a short time.)

The programming MOOC, Think. Create. Code on EdX, was more challenging in many regards. We knew we were in a more difficult space and would be more in what I shall refer to as 'the land of the average MOOC consumer': no strong focus, no PD driver, no geographically guaranteed communities. We had to think carefully about what we considered to be useful interaction with the course material. What counted as success?

To start with, we took an image-based approach (I don't think I need to provide supporting arguments for media-driven computing!) where students would produce images and, over time, refine their coding skills so that they could produce, and understand how to produce, more complex images, building towards animation. People who have not had good access to education may not understand why we would use programming in more complex systems, but our goal was to make images, which is a fairly universally understood idea with a short production timeline and a very clear indication of achievement: "Does it look like a face yet?"

In terms of useful interaction, if someone wrote a single program that drew a face for the first time, then that's valuable. If someone looked at someone else's code and spotted a bug (however we wish to frame this), then that's valuable. I think that someone writing a single line of correct code, where they understand everything that they write, is something that we can all consider to be valuable. Will it get you a degree? No. Will it be useful to you in later life? Well… maybe? (I would say 'yes' but that is a fervent hope rather than a fact.)

So our design brief was that it should be very easy to get into programming immediately, with an active and engaged approach, and that we should keep the same "mostly self-contained week" approach, with lots of good peer interaction and mutual evaluation to identify areas that needed work, allowing us to build our knowledge together. (You know I may as well have 'social constructivist' tattooed on my head, so this is strongly in keeping with my principles.) We wrote all of the materials from scratch, based on a 6-week program that we debated for some time. Materials consisted of short videos, additional material as short notes, participatory activities, quizzes and (we planned for) peer assessment (more on that later). You didn't need to have been exposed to "the lecture", or even to the advanced classroom, to take the course. Any exposure to short videos or a web browser would be enough familiarity to go on with.

Our goal was to encourage as much engagement as possible, taking into account the fact that any number of students over 1,000 would be very hard to support individually, even with the 5-6 staff we had to help out. But we wanted students to be able to develop quickly, share quickly and, ultimately, comment on each other's work quickly. From a cognitive load perspective, it was crucial to keep the number of things that weren't relevant to the task to a minimum, as we couldn't assume any prior familiarity. This meant no installers, no linking, no loaders, no shenanigans. Write program, press play, get picture, share to gallery, winning.

As part of this, our support team (thanks, Jill!) developed a browser-based environment for Processing.js that integrated with a course gallery. Students could save their own work easily and share it trivially. Our early indications show that a lot of students jumped in and tried to do something straight away. (Processing is really good for getting something up, fast, as we know.) We spent a lot of time testing browsers, testing software, and writing code. All of the recorded materials used that development environment (this was important, as Processing.js and Processing have some differences) and all of our videos show the environment in action. Again, we aimed for as little extra cognitive load as possible: no implicit requirement for abstraction or skills transfer. (The AdelaideX team worked so hard to get us over the line; I think we may have eaten some of their brains to save those of our students. Thank you again to the University for selecting us and to Katy and the amazing team.)

The actual student group, about 20,000 people across 176 countries, did not have the "built-in" motivation of the previous group, although they would all have their own levels of motivation. We used 'meet and greet' activities to drive some group formation (which worked to a degree), and we also had a very high level of staff monitoring of key question areas (which participants noted was very high compared with other EdX courses they'd taken), with everyone putting in 30-60 minutes a day on rotation. But, as noted before, the biggest trick to getting everyone engaged at large scale is to get everyone into groups where they have someone to talk to. This was supposed to be provided by a peer evaluation system that was initially part of the assessment package.

Sadly, the peer assessment system didn't work as we wanted it to and we were worried that it would become a disincentive, rather than a supporting community, so we switched to discussing the works on the EdX discussion forum. At this point, the lack of integration between our own UoA programming system and gallery and the EdX discussion system created too much distance: the close binding we had in the F-6 MOOC wasn't there. We're still working on this because everything we know, and all of the evidence we've collected before, tells us that this is a vital part of the puzzle.

In terms of visible output, the amount of novel and amazing artwork that has been generated has blown us all away. The degree of variety is huge: armed with approximately 5 statements, the number of different pieces you can produce is surprisingly large. Add in control statements and repetition? BOOM. Every student can write something that speaks to her or him and show it to other people, encouraging creativity and facilitating engagement.

On the stats side, I don't have access to the raw data, so it's hard for me to give you a statistically sound answer as to who we have or have not reached. This is one of the drawbacks of working with a pre-existing platform and, yes, it bugs me a little, because I can't plot this against that unless someone has built it into the platform. But I think I can tell you some things.

I can tell you that roughly 2,000 students attempted quiz problems in the first week of the course and that over 4,000 watched a video in the first week. No real surprises there: registrations are an indicator of interest, not a commitment. During that time, 7,000 students were active in the course in some way, including just writing code, discussing it and having fun in the gallery environment. (As it happens, we appear to be plateauing at about 3,000 active students, but time will tell. We have a lot of post-course analysis to do.)

It's a mistake to focus on the "drop" rates because the MOOC model is different. We have no idea whether the people who left got what they wanted, or why they didn't do anything. We may never know, but we'll dig into that later.

I can also tell you that only 57% of the students currently enrolled have explicitly declared themselves to be male, and that is the most likely indicator that we are reaching students who might not usually be in a programming course, because the remaining 43%, of whom 33% have self-identified as women, is far higher than anything we ever see in classes locally. If you want evidence of reach, then it begins here, as part of providing an environment that is, apparently, more welcoming to 'non-men'.

We have had a number of student comments that reflect positive reach and, while these are not statistically significant, I think that they also support the idea of additional reach. Students have been asking how they can save their code beyond the course, and this is a good indicator: ownership and a desire to preserve something valuable.

For student comments, however, this is my favourite.

I’m no artist. I’m no computer programmer. But with this class, I see I can be both. #processingjs (Link to student’s work) #code101x .

That’s someone for whom this course had them in the right place in the classroom. After all of this is done, we’ll go looking to see how many more we can find.

I know this is long, but I hope it answered your questions. We're looking forward to doing a detailed write-up after the course closes and we can look at everything.

EduTech AU 2015, Day 2, Higher Ed Leaders, “Change and innovation in the Digital Age: the future is social, mobile and personalised.” #edutechau @timbuckteeth

Posted: June 3, 2015 | Filed under: Education | Tags: advocacy, community, curriculum, design, education, educational problem, edutech2015, feedback, Generation Why, higher education, in the student's head, learning, Mayflower, Mayflower steps, measurement, Plymouth University, principles of design, reflection, resources, steve wheeler, student perspective, students, teaching, teaching approaches, technology, thinking, tools

And heeere's Steve Wheeler (@timbuckteeth)! Steve is an A/Prof of Learning Technologies at Plymouth in the UK. He and I have been at the same event before (CSEDU, Barcelona) and we seem to agree on a lot. Today's cognitive bias warning is that I will probably agree with Steve a lot, again. I've already quizzed him on his talk and, as I understand it, what he wants to talk about is how our students can have altered expectations without necessarily becoming some sort of different species. (There are no Digital Natives. No, Prensky was wrong. Check out Helsper, 2010, from the LSE.) So, on to the talk and enough of my nonsense!

Steve claims he’s going to recap the previous speaker, but in an English accent. Ah, the Mayflower steps on the quayside in Plymouth, except that they’re not, because the real Mayflower steps are in a ladies’ loo in a pub, 100m back from the quay. The moral? What you expect to be getting is not always what you get. (Tourists think they have the real thing, locals know the truth.)

“Any sufficiently advanced technology is indistinguishable from magic” – Arthur C. Clarke.

Educational institutions are riddled with bad technology purchases where we buy something, don’t understand it, don’t support it and yet we’re stuck with it or, worse, try to teach with it when it doesn’t work.

Predicting the future is hard but, for educators, we can do it better if we look at:

- Pedagogy first

- Technology next (that fits the pedagogy)

Steve then plugs his own book with a quote on technology not being a silver bullet.

But who will be our students? What are their expectations for the future? Common answers include: collaboration (student and staff), and more making and doing. They don’t like being talked at. Students today do not have a clear memory of the previous century; their expectations are based on the world that they are living in now, not the world that we grew up in.

Meet Student 2.0!

The average digital birth of children happens at about six months – but they can be on the Internet before they are born, via ultrasound photos. (Anyone who has tried to swipe or pinch-zoom a magazine knows why kids take to it so easily.) Students of today have tools and technology and this is what allows them to create, mash up, and reinvent materials.

What about Game Based Learning? What do children learn from playing games?

Three biggest fears of teachers using technology

- How do I make this work?

- How do I avoid looking like an idiot?

- They will know more about it than I do.

Three biggest fears of students

- Bad wifi

- Spinning wheel of death

- Low battery

The laptops and devices you see in lectures are personal windows on the world, ongoing conversations and learning activities – it’s not purely inattention or anti-learning. Student questions on Twitter can be answered by people all around the world and that’s extending the learning dialogue out a long way beyond the classroom.

Voltaire said that we were products of our age. Walrick asks: how can we prepare students for the future? Steve showed us a picture of himself as a young boy, when he had been turned off asking questions by a mocking teacher. But the last two years of his schooling were in Holland, where he went to the Philips flying saucer, a technology museum. There, he saw an early video conferencing system and that inspired him with a vision of the future.

Steve wanted to be an astronaut but his career advisor suggested he aim lower, because he wasn’t an American. The point is not that Steve wanted to be an astronaut but that he wanted to be an explorer, the role that he occupies now in education.

Steve shared a quote that education is “about teaching students not subjects” and he shared the awesome picture of ‘named quadrilaterals’. My favourite is ‘Bob’. We have a very definite idea of what we want students to write as an answer but we suppress creative answers and we don’t necessarily drive the approach to learning that we want.

Ignorance spreads happily by itself, we shouldn’t be helping it. Our visions of the future are too often our memories of what our time was, transferred into modern systems. Our solution spaces are restricted by our fixations on a specific way of thinking. This prevents us from breaking out of our current mindset and doing something useful.

What will the future be? It was multi-media, it was web, but where is it going? Mobile devices became the most likely web browser platform in 2013 and their share is growing.

What will our new technologies be? Things get smaller, faster, lighter as they mature. We have to think about solving problems in new ways.

Here’s a fire hose sip of technologies: artificial intelligence is on the way up, touch surfaces are getting better, wearables are getting smarter, we’re looking at remote presence, immersive environments, 3D printers are changing manufacturing and teaching, gestural computing, mind control of devices, actual physical implants into the body…

From Nova Spivack, we can plot information connectivity against social connectivity and what we want is growth on both axes – a giant arrow pointing up to the top right. We don’t yet have a Web form that connects information, knowledge and people – i.e. linking intelligence and people. We’re already seeing some of this with recommenders, intelligent filtering, and sentiment tracking. (I’m still waiting for the Semantic Web to deliver; I started doing work on it in my PhD, mumble years ago.)

A possible topology is: infrastructure is distributed and virtualised, our interfaces are 3D and interactive, built onto mobile technology and using ‘intelligent’ systems underneath.

But you cannot assume that your students are all at the same level or have all of the same devices: the digital divide is as real and as damaging as any social divide. Steve alluded to Personal Learning Networks, which you can read about in my previous blog post on him.

How will teaching change? It has to move away from cutting down students into cloned templates. We want students to be self-directed, self-starting, equipped to capture information, collaborative, and oriented towards producing their own things.

Let’s get back to our roots:

- We learn by doing (Piaget, 1950)

- We learn by making (Papert, 1960)

Just because technology is making some of this doing and making easier doesn’t mean we’re making it worthless; it means that we have time to do other things. Flip the roles, not just the classroom. Let students be the teacher – we do learn by teaching. (Couldn’t agree more.)

Back to Papert, “The best learning takes place when students take control.” Students can reflect in blogging as they present their information to a hidden audience that they are actually writing for. These physical and virtual networks grow, building their personal learning networks as they connect to more people who are connected to more people. (Steve’s a huge fan of Twitter. I’m not quite as connected as he is but that’s like saying this puddle is smaller than the North Sea.)

Some of our students are strongly connected and they do store their knowledge in groups and friendships, which really reflects how they find things out. This rolls into digital cultural capital and who our groups are.

(Then there was a stream of images at too high a speed for me to capture – go and download the slides, they’re Creative Commons and a lot of fun.)

Learners will need new competencies and literacies.

Always nice to hear Steve speak and, of course, I still agree with a lot of what he said. I won’t prod him for questions, though.

EduTech Australia 2015, Day 1, Session 1, Part 2, Higher Ed Leaders #edutechau

Posted: June 2, 2015 Filed under: Education, Opinion | Tags: community, curriculum, design, Diane Oblinger, differentiator, education, educational problem, educational research, edutech2015, edutechau, ethics, feedback, higher education, in the student's head, learning, measurement, resources, students, teaching, teaching approaches, thinking

The next talk was a video conference presentation, “Designed to Engage”, from Dr Diane Oblinger, formerly of EDUCAUSE (USA). Diane was joining us by video on the first day of retirement – that’s keen!

Today, technology is not enough; it’s about engagement. Diane believes that the student experience can be a critical differentiator in this. In many institutions, the student will be the differentiator. She asked us to consider three different things:

- What would life be like without technology? How does this change our experiences and expectations?

- Does it have to be human-or-machine? We often construct a false dichotomy of online versus face-to-face rather than thinking about them as a continuum.

- Changes in demography are causing new consumption patterns.

Consider changes in the four key areas:

- Learning

- Pathways

- Credentialing

- Alternate Models

To speak to learning, Diane wants us to think about learning for now, rather than based on our own experiences. What will happen when classic college meets online?

Diane started from the premise that higher order learning comes from complex challenges – how can we offer this to students? Well, there are game-based, highly experiential activities. They’re complex, interactive, integrative, driven by information gathering, team focused and failure is part of the process. They also develop tenacity (with enough scaffolding, of course). We also get, almost for free, vast quantities of data to track how students performed their solving activities, which is far more than “right” or “wrong”. Does a complex world need more of these?

The second point for learning environments is that, sometimes, massive and intensive can go hand-in-hand. An example is the Georgia Tech Online Master of Science in Computer Science, on Udacity, with assignments, TAs, social media engagement and problem-solving. (I need to find out more about this. Paging the usual suspects.)

The second area discussed was pathways. Students lose time, track and credits when they start to make mistakes along the way and this can lead to them getting lost in the system. Cost is a huge issue in the US (and, yes, it’s a growing issue in Australia, hooray.) Can you reduce cost without reducing learning? Students are benefiting from guided pathways to success. Georgia State and their predictive analytics were mentioned again here – leading students to more successful pathways to get better outcomes for everyone. Greatly increased retention, greatly reduced wasted tuition fees.

We now have a lot more data on what students are doing – the challenge for us is how we integrate this into better decision making. (Ethics, accuracy, privacy are all things that we have to consider.)

Learning needs to not be structured around seat time and credit hours. (I feel dirty even typing that.) Our students learn how to succeed in the environments that we give them. We don’t want to train them into mindless repetition. Once again, competency based learning, strongly formative, reflecting actual knowledge, is the way to go here.

(I really wish that we’d properly investigated the CBL first year. We might have done something visionary. Now we’ll just look derivative if we do it three years from now. Oh, well, time to start my own University – Nickapedia, anyone?)

Credentials reared their ugly head again – it’s one of the things that Unis have had in the bag. What is the new approach to credentials in the digital environment? Certificates and diplomas can be integrated into your on-line identity. (Again, security, privacy, ethics are all issues here but the idea is sound.) The example given was “Degreed”, a standalone credentialing site that can work to bridge recognised credentials from provider to employer.

Alternatives to degrees are being co-created by educators and employers. (I’m not 100% sure I agree with this. I think that some employers have great intentions but, very frequently, it turns into a requirement for highly specific training that might not be what we want to provide.)

Can we build an alternative model that reinvents delivery systems, business models and support models? Can a curriculum be decentralised in a centralised University? What about models like Minerva? (Jeff mentioned this as well.)

(The slides got out of whack with the speaker for a while, apologies if I missed anything.)

(I should note that I get twitchy when people set up education for-profit. We’ve seen that this is a volatile market and we have the tension over where money goes. I have the luxury of working for an entity where its money goes to itself, somehow. There are no shareholders to deal with, beyond the 24,000,000 members of the population, who derive societal and economic benefit from our contribution.)

As noted on the next slide, working learners represent a sizeable opportunity for increased economic growth and mobility. More people in college is actually a good thing. (As an aside, it always astounds me when someone suggests that people are spending too much time in education. It’s like the insult “too clever by half”, you really have to think about what you’re advocating.)

For her closing thoughts, Diane thinks:

- The boundaries of the educational system must be re-conceptualised. We can’t ignore what’s going on around us.

- The integration of digital and physical experiences is creating new ways to engage. Digital is here and it’s not going away. (Unless we totally destroy ourselves, of course, but that’s a larger problem.)

- Can we design a better future for education?

Lots to think about and, despite some technical issues, a great talk.

Think. Create. Code. Vis! (@edXOnline, @UniofAdelaide, @cserAdelaide, @code101x, #code101x)

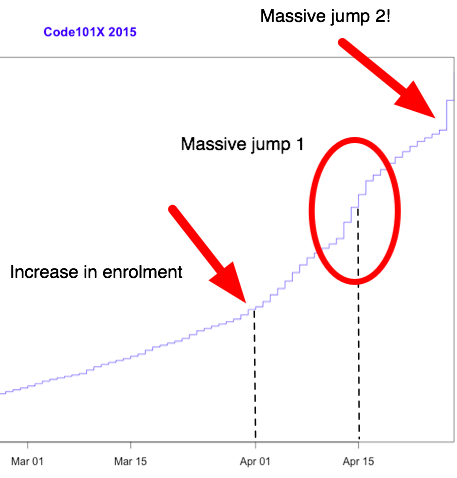

Posted: April 30, 2015 Filed under: Education, Opinion | Tags: #code101x, advocacy, blogging, collaboration, community, curriculum, data visualisation, education, educational problem, educational research, edx, higher education, learning, measurement, MOOC, moocs, reflection, resources, teaching, teaching approaches, thinking, tools, universal principles of design

I just posted about the massive growth in our new on-line introductory programming course but let’s look at the numbers so we can work out what’s going on and, maybe, what led to that level of success. (Spoilers: central support from EdX helped a huge amount.) So let’s get to the data!

I love visualised data so let’s look at the growth in enrolments over time – this is really simple graphical stuff as we’re spending time getting ready for the course at the moment! We’ve had great support from the EdX team through mail-outs and Twitter and you can see these in the ‘jumps’ in the data that occurred at the beginning, halfway through April and again at the end. Or can you?

Hmm, this is a large number, so it’s not all that easy to see the detail at the end. Let’s zoom in and change the layout of the data over to steps so we can see things more easily. (It’s worth noting that I’m using the free R statistical package to do all of this. I can change one line in my R program and regenerate all of my graphs and check my analysis. When you can program, you can really save time on things like this by using tools like R.)

Now you can see where that increase started and then the big jump around the time that e-mail advertising started, circled. That large spike at the end is around 1500 students, which means that we jumped 10% in a day.
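The “10% in a day” figure is just the day’s increase divided by the previous day’s total. Here is a minimal sketch of that calculation, written in Python rather than the R used for the graphs. The cumulative totals below are made up for illustration (they are not our enrolment data), and the 5% spike threshold is an arbitrary, hypothetical cut-off.

```python
def daily_jumps(totals):
    """Return (day_index, increase, percent_of_previous_total) for each day after the first.

    `totals` is a hypothetical list of cumulative enrolment counts, one per day.
    """
    jumps = []
    for day in range(1, len(totals)):
        increase = totals[day] - totals[day - 1]
        percent = 100.0 * increase / totals[day - 1]
        jumps.append((day, increase, percent))
    return jumps


def flag_spikes(totals, threshold_percent=5.0):
    """Return the day indices whose growth meets or exceeds `threshold_percent`.

    The 5% default is an illustrative assumption, not a figure from the course.
    """
    return [day for day, _, percent in daily_jumps(totals) if percent >= threshold_percent]


# Hypothetical totals: steady growth, then a mail-out style jump of 1,500 on the last day.
totals = [14200, 14400, 14550, 14700, 15000, 16500]
for day, increase, percent in daily_jumps(totals):
    print(f"day {day}: +{increase} ({percent:.1f}%)")
print("spike days:", flag_spikes(totals))
```

With a base of around 15,000, a jump of 1,500 comes out at 10%, which is the shape of the spike described above.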

When we started looking at this data, we wanted to get a feeling for how many students we might get. This is another common use of analysis – trying to work out what is going to happen based on what has already happened.

As a quick overview, we tried to predict the future based on three different assumptions:

- that the growth from day to day would be roughly the same, which is assuming linear growth.

- that the growth would increase more quickly, with the amount of increase doubling every day (this isn’t the same as the total number of students doubling every day).

- that the growth would increase even more quickly than that, although not as quickly as if the number of students were doubling every day.
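To make the difference between the first two assumptions concrete, and to show why a doubling *increase* is not the same as a doubling *total*, here is a small Python sketch. It is not the model we actually fitted in R: it simply projects forward from one hypothetical starting total and daily increase, and Assumption 3 is not sketched here.

```python
def project_linear(total, daily_increase, days):
    """Assumption 1: the same number of new students arrives every day."""
    return total + daily_increase * days


def project_growing_increase(total, daily_increase, ratio, days):
    """Assumption 2 style: each day's increase is `ratio` times the previous day's increase.

    With ratio=2 the *increase* doubles each day; the total grows more slowly at first.
    """
    increase = daily_increase
    for _ in range(days):
        increase *= ratio
        total += increase
    return total


def project_total_doubling(total, days):
    """For contrast: the whole enrolment doubles every day."""
    return total * 2 ** days


# Hypothetical starting point: 1,000 students, gaining 100 per day, projected 3 days ahead.
print(project_linear(1000, 100, 3))               # prints 1300
print(project_growing_increase(1000, 100, 2, 3))  # prints 2400 (1000 + 200 + 400 + 800)
print(project_total_doubling(1000, 3))            # prints 8000
```

Setting `ratio=1` turns the second function back into the linear model, which is a handy sanity check.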

If Assumption 1 was correct, then we would expect the graph to look like a straight line, rising diagonally. It’s not. (As it is, this model predicted that we would only get 11,780 students. We crossed that line about 2 weeks ago.)

So we know that our model must take into account the faster growth, but those leaps in the data are changes caused by things outside of our control – EdX sending out a mail message appears to cause a jump of roughly 800-1,600 students, and it persists for a couple of days.

Let’s look at what the models predicted. Assumption 2 predicted a final student number around 15,680. Uhh. No. Assumption 3 predicted a final student number around 17,000, with an upper bound of 17,730.

Hmm. Interesting. We’ve just hit 17,571 so it looks like all of our measures need to take into account the “EdX” boost. But, as estimates go, Assumption 3 gave us a workable ballpark and we’ll probably use it again for the next time that we do this.
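As a quick check on what “workable ballpark” means, here is the arithmetic for how far each prediction sits from the 17,571 enrolments, as a small Python sketch. The figures are the ones quoted in this post; signed percentage error is just one simple way to compare them.

```python
ACTUAL = 17571  # enrolments at the time of writing

predictions = {
    "Assumption 1 (linear)": 11780,
    "Assumption 2": 15680,
    "Assumption 3": 17000,
    "Assumption 3 upper bound": 17730,
}


def percent_error(predicted, actual):
    """Signed error as a percentage of the actual value (negative means an under-estimate)."""
    return 100.0 * (predicted - actual) / actual


for name, predicted in predictions.items():
    print(f"{name}: predicted {predicted}, off by {predicted - ACTUAL:+d} "
          f"({percent_error(predicted, ACTUAL):+.1f}%)")
```

The linear model misses by about a third, Assumption 2 by roughly 11%, and Assumption 3 by about 3%, with its upper bound sitting just above the actual number.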

Now let’s look at demographic data. We now have 171-172 countries (it varies a little) but how are we going for participation across gender, age and degree status? Giving this information to EdX is totally voluntary but, as long as we take that into account, we can make some interesting discoveries.

Our median student age is 25, with roughly 40% under 25 and roughly 40% from 26 to 40. That means roughly 20% are 41 or over. (It’s not surprising that the graph sits to one side like that. If the left tail was the same size as the right tail, we’d be dealing with people who were -50.)

The gender data is a bit harder to display because we have four categories: male, female, other and not saying. In terms of female representation, we have 34% of students who have defined their gender as female. If we look at the declared male numbers, we see that 58% of students have declared themselves to be male. Taking into account all categories, this means that our female participant percentage could be as high as 42% but is at least 34%. That’s much higher than usual participation rates in face-to-face Computer Science and is really good news in terms of getting programming knowledge out there.
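The bounds come from simple arithmetic on the declared categories: the lower bound counts only students who declared female, and the upper bound assumes that everyone who chose “other” or “not saying” is female, which is a deliberately generous assumption. A minimal Python sketch using the 34% and 58% figures:

```python
def female_share_bounds(declared_female, declared_male):
    """Return (lower, upper) bounds on the female share, in percent.

    Lower bound: only students who declared female.
    Upper bound: also count everyone who chose 'other' or did not say,
    i.e. 100 minus the declared-male share.
    """
    other_or_undeclared = 100 - declared_female - declared_male
    return declared_female, declared_female + other_or_undeclared


print(female_share_bounds(34, 58))  # prints (34, 42)
```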

We’re currently analysing our growth by all of these groupings to work out which approach is the best for which group. Do people prefer Twitter, mail-out, community linkage or something else when it comes to getting them into the course?

Anyway, lots more to think about and many more posts to come. But we’re on and going. Come and join us!

Think. Create. Code. Wow! (@edXOnline, @UniofAdelaide, @cserAdelaide, @code101x, #code101x)

Posted: April 30, 2015 Filed under: Education, Opinion | Tags: #code101x, advocacy, authenticity, blogging, community, cser digital technologies, curriculum, digital technologies, education, educational problem, educational research, ethics, higher education, Hugh Davis, learning, MOOC, moocs, on-line learning, research, Southampton, student perspective, teaching, teaching approaches, thinking, tools, University of Southampton

Things are really exciting here because, after the success of our F-6 on-line course to support teachers for digital technologies, the Computer Science Education Research group are launching their first massive open on-line course (MOOC) through AdelaideX, the partnership between the University of Adelaide and EdX. (We’re also about to launch our new 7-8 course for teachers – watch this space!)

Our EdX course is called “Think. Create. Code.” and it’s open right now for Week 0, although the first week of real content doesn’t go live until the 30th. If you’re not already connected with us, you can also follow us on Facebook (code101x) or Twitter (@code101x), or search for the hashtag #code101x. (Yes, we like to be consistent.)

I am slightly stunned to report that, less than 24 hours before the first content starts to roll out, we have 17,531 students enrolled, across 172 countries. Not only that, but when we look at gender breakdown, we have somewhere between 34-42% women (not everyone chooses to declare a gender). For an area that struggles with female participation, this is great news.

I’ll save the visualisation data for another post, so let’s quickly talk about the MOOC itself. We’re taking a 6-week approach, where students focus on developing artwork and animation using the Processing language, but it requires no prior knowledge and runs inside a browser. The interface that has been developed by the local Adelaide team (thank you for all of your hard work!) is outstanding and it’s really easy to make things happen.

I love this! One of the biggest obstacles to coding is having to wait until you see what happens and this can lead to frustration and bad habits. In Processing you can have a circle on the screen in a matter of seconds and you can start playing with colour in the next second. There’s a lot going on behind the screen to make it this easy but the student doesn’t need to know it and can get down to learning. Excellent!

I went to a great talk at CSEDU last year, presented by Hugh Davis from Southampton, where Hugh raised some great issues about how MOOCs compared to traditional approaches. I’m pleased to say that our demography is far more widespread than what was reported there. Although the US dominates, we have large representations from India, Asia, Europe and South America, with a lot of interest from Africa. We do have a lot of students with prior degrees but we also have a lot of students who are at school or who aren’t at University yet. It looks like the demography of our programming course is much closer to the democratic promise of free on-line education but we’ll have to see how that all translates into participation and future study.

While this is an amazing start, the whole team is thinking of this as part of a project that will be going on for years, if not decades.

When it came to our teaching approach, we spent a lot of time talking (and learning from other people and our previous attempts) about the pedagogy of this course: what was our methodology going to be, how would we implement this and how would we make it the best fit for this approach? Hugh raised questions about the requirement for pedagogical innovation and we think we’ve addressed this here through careful customisation and construction (we are working within a well-defined platform so that has a great deal of influence and assistance).

We’ve already got support roles allocated to staff and students will see us on the course, in the forums, and helping out. One of the reasons that we tried to look into the future for student numbers was to work out how we would support students at this scale!

One of the most important things for us to remember is that completion may not mean anything in the on-line format. Someone comes on, gets an answer to the most pressing question that is holding them back from coding, and then leaves in the first week? That’s great. That’s success! How we measure that, and turn that into traditional numbers that match what we do in face-to-face, is going to be something we deal with as we get more information.

The whole team is raring to go and the launch point is so close. We’re looking forward to working with thousands of students, all over the world, for the next six weeks.

Sound interesting? Come and join us!

Musing on Industrial Time

Posted: April 20, 2015 Filed under: Education, Opinion | Tags: advocacy, authenticity, blogging, community, curriculum, design, education, educational problem, educational research, ethics, higher education, in the student's head, learning, measurement, principles of design, reflection, resources, student perspective, teaching, teaching approaches, thinking, time banking, universal principles of design, work/life balance, workload

I caught up with a good friend recently and we were discussing the nature of time. She had stepped back from her job and was now spending a lot of her time with her new-born son. I have gone to working three days a week, hence have also stepped back from the five-day grind. It was interesting to talk about how this change to our routines had changed the way that we thought of and used time. She used a term that I wanted to discuss here, which was industrial time, to describe the clock-watching time of the full-time worker. This is part of the larger area of time discipline, how our society reacts to and uses time, and is really quite interesting. Both of us had stopped worrying about the flow of time in measurable hours on certain days and we just did things until we ran out of day. This is a very different activity from the usual “do X now, do Y in 15 minutes time” that often consumes us. In my case, it took me about three months of considered thought and re-training to break the time discipline habits of thirty years. In her case, she has a small child to help her to refocus her time sense on the now.

Modern time-sense is so pervasive that we often don’t think about some of the underpinnings of our society. It is easy to understand why we have years and, although they don’t line up properly, months given that these can be matched to astronomical phenomena that have an effect on our world (seasons and tides, length of day and moonlight, to list a few). Days are simple because that’s one light/dark cycle. But why are there 52 weeks in a year? Why are there 7 days in a week? Why did the 5-day week emerge as a contiguous block of 5 days? What is so special about working 9am to 5pm?

A lot of modern time descends from the struggle of radicals and unionists to protect workers from the excesses of labour, to stop people being worked to death, and the notion of the 8 hour day is an understandable division of a 24 hour day into three even chunks for work, rest and leisure. (Goodness, I sound like I’m trying to sell you chocolate!)

If we start to look, it turns out that the 7 day week is there because it’s there, based on religion and tradition. Interestingly enough, there have been experiments with other week lengths but it appears hard to shift people who are used to a certain routine and, tellingly, making people wait longer for days off appears to be detrimental to adoption.

If we look at seasons and agriculture, then there is a time to sow, to grow, to harvest and to clear, much as there is a time for livestock to breed and to be raised for purpose. If we look to the changing time of sunrise and sunset, there is a time at which natural light is available and when it is not. But, from a time discipline perspective, these time systems are not enough to be able to build a large-scale, industrial and synchronised society upon – we must replace a distributed, loose and collective notion of what time is with one that is centralised, authoritarian and singular. While religious ceremonies linked to seasonal and astronomical events did provide time-keeping on a large scale prior to the industrial revolution, the requirement for precise time, of an accuracy to hours and minutes, was not possible and, generally, not required beyond those cues given from nature such as dawn, noon, dusk and so on.

After the industrial revolution, industries and forms of work developed that were heavily separated from natural cycles – there are no seasons for a coal mine or a steam engine – and the development of the clock and reinforcement of the calendar of work allowed both the measurement of working hours (for payment) and the determination of deadlines, given that natural forces did not have to be considered to the same degree. Steam engines are completed; they have no need to ripen.

With the notion of fixed and named hours, we can very easily determine if someone is late when we have enough tools for measuring the flow of time. But this is, very much, the notion of the time that we use in order to determine when a task must be completed, rather than taking an approach that accepts that the task will be completed at some point within a more general span of time.

We still have confusion where our understanding of “real measures”, such as days, interacts with time discipline. Is midnight on the 3rd of April the second after the last moment of April the 2nd or the second before the first moment of April the 4th? Is midnight 12:00pm or 12:00am? (There are well-defined answers to this but the nature of the intersection is such that definitions have to be made.)
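Software has to pick one of those definitions. As one illustration (not a claim about how any particular university submission system behaves), Python’s standard `datetime` module treats midnight as 00:00, the first instant of a day, so “midnight on the 3rd” falls one second after the last second of the 2nd:

```python
from datetime import datetime, timedelta

# In Python's datetime, midnight (00:00) is the *start* of a day,
# so this is the first instant of 3 April, not the end of it.
midnight_3rd = datetime(2015, 4, 3, 0, 0, 0)
last_second_of_2nd = datetime(2015, 4, 2, 23, 59, 59)

print(midnight_3rd - last_second_of_2nd)  # prints 0:00:01
print(midnight_3rd.strftime("%I:%M %p"))  # 12:00 AM in a C/English locale
```

A deadline written as “midnight on the 3rd” is therefore easy to misread; stating the last acceptable instant (say, 23:59 on the 3rd) removes the ambiguity.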

But let’s look at teaching for a moment. One of the great criticisms of educational assessment is that we confuse timeliness, and in this case we specifically mean an adherence to meeting time discipline deadlines, with achievement. Completing the work a crucial hour after it is due can lead to that work potentially not being marked at all, or being rejected. We do usually have over-riding reasons for doing this but, sadly, these reasons are as artificial as the deadlines we impose. Why is an Engineering Degree a four-year degree? If we changed it to six would we get better engineers? If we switched to competency based training, modular learning and life-long learning, would we get more people who were qualified or experienced with engineering? Would we get fewer? What would happen if we switched to a 3/1/2/1 working week? Would things be better or worse? It’s hard to evaluate because the week, and the contiguous working week, are so much a part of our world that I imagine that today is the first day that some of you have thought about it.

Back to education and, right now, we count time for our students because we have to work out bills and close off accounts at end of financial year, which means we have to meet marking and award deadlines, then we have to project our budget, which is yearly, and fit that into accredited degree structures, which have year guidelines…

But I cannot give you a sound, scientific justification for any of what I just wrote. We do all of that because we are caught up in industrial time first and we convince ourselves that building things into that makes sense. Students do have ebb and flow. Students are happier on certain days than others. Transition issues on entry to University are another indicator that students develop and mature at different rates – why are we still applying industrial time from top to bottom when everything we see here says that it’s going to cause issues?

Oh, yes, the “real world” uses it. Except that regular studies of industrial practice show that 40-hour weeks, regular days off, working from home and so on are more productive than the burn-out, everything-late rush that we consider to be the signs of drive. (If Henry Ford thought that making people work more than 40 hours a week was bad for business, he’s worth listening to.) And that’s before we factor in the development of machines that will replace vast numbers of human jobs in the next 20 years.

I have a different approach. Why aren’t we looking at students more like we regard our grape vines? We plan, we nurture, we develop, we test, we slowly build them to the point where they can produce great things and then we sustain them for a fruitful and long life. When you plant grape vines, you expect a first reasonable crop level in three years, and commercial levels at five. Tellingly, the investment pattern for grapes is that it takes you 10 years to break even and then you start making money back. I can’t tell you how some of my students will turn out until 15-25 years down the track and it’s insanity to think you can base retrospective funding on that timeframe.

You can’t make your grapes better by telling them to be fruitful in two years. Some vines take longer than others. You can’t even tell them when to fruit (although you can trick them a little). Yet, somehow, we’ve managed to work around this to produce a local wine industry worth around $5 billion. We can work with variation and seasonal issues.

One of the reasons I’m so keen on MOOCs is that these can fit in with the routines of people who can’t dedicate themselves to full-time study at the moment. By placing well-presented, pedagogically-sound materials on-line, we break through the tyranny of the 9-5, 5 day work week and let people study when they are ready to, where they are ready to, for as long as they’re ready to. Like to watch lectures at 1am, hanging upside down? Go for it – as long as you’re learning and not just running the video in the background while you do crunches, of course!

Once you start to question why we have so many days in a week, you quickly start to wonder why we get so caught up in something so artificial. The simple answer is that, much like money, we have it because we have it. Perhaps it’s time to look at our educational system to see if we can do something that would be better suited to developing really good knowledge in our students, instead of making them adept at sliding work under our noses a second before it’s due. We are developing systems and technologies that can allow us to step outside of these structures and this is, I believe, going to be better for everyone in the process.

Conformity isn’t knowledge, and conformity to time just because we’ve always done that is something we should really stop and have a look at.

That’s not the smell of success, your brain is on fire.

Posted: February 11, 2015 Filed under: Education | Tags: authenticity, collaboration, community, curriculum, design, education, educational problem, educational research, Henry Ford, higher education, in the student's head, industrial research, learning, measurement, multi-tasking, reflection, resources, student perspective, students, teaching, teaching approaches, thinking, time banking, work/life balance, working memory, workload

I’ve written before about the issues of prolonged human workload leading to ethical problems, and about the fact that regularly working more than 40 hours a week is downright unproductive because you become less efficient and more error-prone. This is not some 1968 French student revolutionary musing on what benefits the soul of a true human; this is industrial research by Henry Ford and the U.S. Army, neither of whom could be classified as Foucault-worshipping Situationist yurt-dwelling flower children, showing that there are limits to how long you can work in a sustained weekly pattern and still get useful things done while maintaining your awareness of the world around you.

The myth won’t die, sadly, because physical presence and hours attending work are very easy to measure, while productive outputs and their origins in a useful process on a personal or group basis are much harder to measure. A cynic might note that the people who are around when there is credit to take may end up being the people who (reluctantly, of course) take the credit. But we know that it’s rubbish. And the people who’ve confirmed this are both philosophers and the commercial sector. One day, perhaps.

But anyone who has studied cognitive load, the way that human thinking performs as it works and comes under stress, will be aware that we have a finite amount of working memory. We can really only track so many things at one time, and when we exceed that we get issues like the helmet fire that I refer to in the first linked piece, where you can’t perform any task efficiently and you lose track of where you are.

So what about multi-tasking?

Ready for this?

We don’t multi-task.

There’s a ton of research on this but I’m going to link you to a recent article by Daniel Levitin in the Guardian Q&A. The article covers the fact that what we are really doing is switching quickly from one task to another, dumping one set of information from working memory and loading in another, which of course means that working on two things at once is less efficient than doing two things one after the other.

But it’s more poisonous than that. The sensation of multi-tasking is actually quite rewarding as we get a regular burst of the “oooh, shiny” rewards our brain gives us for finding something new, and we enter a heightened state of task readiness (fight or flight) that can also make us feel, for want of a better word, more alive. But we’re burning up the brain’s fuel at a fearsome rate in order to be less efficient, so we tire more quickly.

Get the idea? Multi-tasking is horribly inefficient task switching that feels good but makes us tired faster and does things less well. But when we achieve tiny tasks in this death spiral of activity, like replying to an e-mail, we get a burst of reward hormones. So if your multi-tasking includes something like checking e-mails when they come in, you’re going to get more and more distracted by that, to the detriment of every other task. But you’re going to keep doing them because multi-tasking.

I regularly get told by parents that their children are able to multi-task really well. They can do X, watch TV, do Y and it’s amazing. Well, your children are my students and everything I’ve seen confirms what the research tells me: no, they can’t, but they can give a convincing impression when asked. When you dig into what gets produced, it’s a different story. If someone sits down and does the work as a single task, it will take them less time and they will do a better job than if they juggle five things. The five things will take more than five times as long (up to ten times, which really blows out time estimation) and will not be done as well, nor will the students learn about the work in the right way. (You can actually sabotage long-term storage by multi-tasking in the wrong way.) The most successful study groups around the Uni are small, focused groups that stay on one task until it’s done and then move on. The ones with music and no focus will be sitting there for hours after the others are gone. Fun? Yes. Efficient? No. And most of my students need to be at least reasonably efficient to get everything done. Have some fun but try to get all the work done too – it’s educational, I hear. 🙂

It’s really not a surprise that we haven’t changed humanity in one or two generations. Our brains are simply not built (yet) to handle the quite large overhead of constantly switching between tasks, which is what multi-tasking actually requires.

We can handle multiple tasks, no doubt at all, but we’ve just got to make sure, for our own well-being and overall ability to complete the task, that we don’t fall into the attractive, but deceptive, trap that we are some sort of parallel supercomputer.

5 Things I Would Like My Students to Be Able to Perceive

Posted: January 25, 2015 Filed under: Education | Tags: advocacy, assumptions, authenticity, blogging, curriculum, education, ethics, higher education, perception, privilege, student, student perspective, thinking, tone

Our students will go out into the world and will be exposed to many things but, if we have done our job well, they will not just be pushed around by the pressure of the events that they witness; they will be able to hold their ground, perceive what is really going on, and place their own stamp on the world.

I don’t tell my students how to think (although I know that it’s a commonly held belief that everyone at a Uni tries to shape the political and developmental thought of their students); I just try to get them to think. This is probably going to have the side effect of making them thoughtful, potentially even critical of things that don’t make sense, and I realise that this is something that not everybody wants from junior citizens. But that’s my job.

Here is a list of five things that I think I’d like a thoughtful person to be able to perceive. It’s not the definitive five or the perfect five but these are the ones that I have today.

- It would be nice if people were able to reliably tell the difference between 1/3 and 1/4 and understand that 1/3 is larger than 1/4. Being able to work out the odds of things (how likely they are) requires you to be able to look at two things that are smaller than one and get them in the right order, so you can say “this is more likely than that”. Working with percentages can make it easier, but this requires people to do division, rather than just counting things and showing the fraction. But I’d like my students to be able to perceive how this can be a fundamental misunderstanding, one that means some people can genuinely look at comparative probabilities and be unable to see that this simple mathematical comparison is valid. And I’d like them to be able to think about how to communicate this to help people understand.

- A perceptive person would be able to spot when something isn’t free. There are many people who go into casinos and have a lot of fun gambling, eating very cheap or unlimited food and staying in cheap hotels, and think about what a great deal it is. However, every game you play in a casino is designed so that casinos do not make a loss, and rather than just saying “of course” we need to realise that casinos can offer “unlimited buffet shrimp”, “cheap luxury rooms” and “free luxury for whales” precisely because they are making so much money. Nothing in a casino is free. It is paid for by the people who lose money there. This is not, of course, to say that you shouldn’t go and gamble if you’re an adult and you want to; it’s about being able to see and clearly understand that everything around you is being paid for, even if not in a transparently direct way. There are enough people who suffer from the gambler’s fallacy to put this item on the list.

- A perceptive person would have a sense of proportion. They would not start issuing death threats in an argument over operating systems (or ever, preferably) and they would not consign discussions of human rights to amusing after-dinner conversation, as if they were something to be played with.

- A perceptive person would understand the need to temper the message to suit the environment, while still maintaining their own ethical code regarding truth and speaking up. You don’t need to tell a 3-year-old that their painting is awful any more than you need to humiliate a colleague in public for not knowing something that you know. If anything, that restraint makes the times when you do deliver a message bluntly much more powerful.

- Finally, a perceptive person would be able to at least try to look at life through someone else’s eyes and understand that perception shapes our reality. How we appear to other people is far more likely to dictate their reaction than who we really are. If you can’t change the way you look at the world then you risk getting caught up on your own presumptions and you can make a real fool of yourself by saying things that everyone else knows aren’t true.
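The first item on that list, comparing 1/3 with 1/4, is small enough to work through directly. What follows is a minimal sketch in Python, assuming nothing beyond the standard library's `fractions` module; the helper names `larger_fraction` and `as_percentage` are hypothetical, made up for illustration. It shows the two routes mentioned: put both fractions over a common denominator (1/3 = 4/12 and 1/4 = 3/12, so 1/3 wins), or divide to get percentages.

```python
from fractions import Fraction


def larger_fraction(a: Fraction, b: Fraction) -> Fraction:
    """Hypothetical helper: return the larger of two fractions.

    Cross-multiplying is the same as writing both fractions over the
    shared denominator a.denominator * b.denominator and comparing the
    numerators, so no division is needed.
    """
    a_scaled = a.numerator * b.denominator  # numerator of a over the shared denominator
    b_scaled = b.numerator * a.denominator  # numerator of b over the shared denominator
    return a if a_scaled >= b_scaled else b


def as_percentage(f: Fraction) -> float:
    """Hypothetical helper: convert a fraction to a percentage (this step needs division)."""
    return float(f) * 100


third, quarter = Fraction(1, 3), Fraction(1, 4)
print(larger_fraction(third, quarter))  # 1/3 (4/12 versus 3/12)
print(round(as_percentage(third), 1), round(as_percentage(quarter), 1))  # 33.3 25.0
```

`Fraction` objects can also be compared directly (`third > quarter` is `True`); the helper just spells out the common-denominator reasoning.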

There’s so much more and I’m sure everyone has their own list but it’s, as always, something to think about.