Reflecting on rewards – is Time Banking a reward or a technique?

Posted: July 29, 2012 Filed under: Education | Tags: education, educational problem, educational research, feedback, higher education, in the student's head, measurement, reflection, student perspective, teaching, teaching approaches, thinking, time banking, tools, universal principles of design

Enough advocacy for a while, time to think about research again! Given that I’ve just finished Alfie Kohn’s Punished by Rewards, and more on that later, I’ve been looking very carefully at everything I do with students to work out exactly what I am trying to do. One of Kohn’s theses is that we tend to manipulate people towards compliance through extrinsic tools such as incentives and rewards, rather than provide an environment in which their intrinsic motivational aspects dominate and they are driven to work through their own interest and requirements. Under Kohn’s approach, a gold star for sitting quietly achieves little beyond saying that sitting quietly must be so bad that you need to be bribed, and developing a taste for gold stars in the student. If someone isn’t sitting quietly, is it because they haven’t managed sitting quietly (the least rewarding unlockable achievement in any game) or that they are disengaged, bored or failing to understand why they are there? Is it, worse, because they are trying to ask questions about work that they don’t understand or because they are so keen to discuss it that they want to talk? Kohn wants to know WHY people are or aren’t doing things rather than just to stop or start people doing things through threats and bribery.

Where, in this context, does time banking fit? For those who haven’t read me on this before, time banking is described in a few posts I’ve made, with this as one of the better ones to read. In summary, students who hand up work early (and meet a defined standard) get hours in the bank that they can spend at a later date to give themselves a deadline extension – and there are a lot of tuneable parameters around this, but that’s the core. I already have a lot of data that verifies that roughly a third of students hand in on the last day and 15-18% hand up late. However, the 14th highest hand-in hour is the one immediately after the deadline. There’s an obvious problem where people aren’t giving themselves enough time to do the work but “near-missing” by one hour is a really silly way to lose marks. (We won’t talk about the pedagogical legitimacy of reducing marks for late work at the moment, that’s a related post I hope to write soon. Let’s assume that our learning design requires that work be submitted at a certain time to reinforce knowledge and call that the deadline – the loss, as either marks or knowledge reinforcement, is something that we want to avoid.)

But, by providing a “reward” for handing up early, am I trying to bribe my students into behaviour that I want to see? I think that the answer is “no”, for reasons that I’ll go into.

Firstly, the fundamental concept of time banking is that students have a reason to look at their assignment submission timetable as a whole and hand something up early because they can then gain more flexibility later on. Under current schemes, unless you provide bonus points, there is no reason for anyone to hand up more than one second early – assuming synchronised clocks. (I object to bonus points for early hand-in for two reasons: it is effectively a means to reward the able or those with more spare time, and because it starts to focus people on handing up early rather than the assignment itself.) This, in turn, appears to lead to a passive, last minute thinking pattern and we can see the results of that in our collected assignment data – lots and lots of near-miss late hand-ins. Our motivation is to focus the students on the knowledge in the course by making them engage with the course as a whole and empowering themselves into managing their time rather than adhering to our deadlines. We’re not trying to control the students, we’re trying to move them towards self-regulation where they control themselves.

Secondly, the same amount of work has to be done. There is no ‘reduced workload’ for handing in early, there is only ‘increased flexibility’. Nobody gets anything extra under this scheme that will reinforce any messages of work as something to be avoided. The only way to get time in the bank is to do the assignments – it is completely linked to the achievement that is the core of the course, rather than taking focus elsewhere.

Thirdly, a student can choose not to use it. Under almost every version of the scheme I’ve sketched out, every student gets 6 hours up their sleeve at the start of semester. If they want to just burn that for six late hand-ins that are under an hour late, I can live with that. It will also be very telling if they then turn out to be two hours late because, thinking about it, that’s a very interesting mental model that they’ve created.
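For readers who prefer code to prose, the core of the scheme can be sketched in a few lines of Python. This is only an illustration, not the system we are building: the class name `TimeBank`, the `submit` method, the one-for-one exchange rate and the rounding to whole hours are all assumptions made for the example. Only the six-hour starting balance and the rule that early work meeting a standard earns hours, while late work spends them, come from the description above.

```python
import math
from dataclasses import dataclass


@dataclass
class TimeBank:
    """Hypothetical per-student ledger of banked hours."""

    balance_hours: int = 6  # starting balance mentioned in the post

    def submit(self, hours_before_deadline: float, meets_standard: bool = True) -> int:
        """Record one hand-in and return the whole hours still counted as late.

        A positive hours_before_deadline is an early hand-in, a negative
        one is a late hand-in.
        """
        if hours_before_deadline >= 0:
            # Early work only earns credit if it meets the defined standard.
            # Assumption: one whole hour early banks one hour.
            if meets_standard:
                self.balance_hours += math.floor(hours_before_deadline)
            return 0
        # Assumption: lateness is charged in whole hours, rounded up.
        charged = math.ceil(-hours_before_deadline)
        covered = min(charged, self.balance_hours)
        self.balance_hours -= covered
        return charged - covered
```

Under these assumptions, a student who burns the starting balance on six hand-ins that are each half an hour late has nothing left for the seventh, which would then count as one hour late.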

But how is it going to affect the student? That’s a really good question. I think that, the way that it’s constructed, it provides a framework for students to work with, one that ties in with intrinsic motivation, rather than a framework that is imposed on students – in fact, moving away from the rigidly fixed deadlines that (from our data) don’t even seem to be training people that well anyway is a reduction in manipulative external control.

Will it work? Oh, now, there’s a question. After about a year of thought and discussion, we’re writing it all up at the moment for peer review on the foundations, the literature comparison, the existing evidence and the future plans. I’ll be very interested to see both the final paper and the first responses from our colleagues!

A Flurry of Inauthenticity

Posted: July 27, 2012 Filed under: Education | Tags: authenticity, education, educational problem, higher education, in the student's head, measurement, student perspective, teaching, teaching approaches

I’ve received numerous poorly personalised e-mails recently – today’s was from a company that published some of my work in a book and was addressed as “Dear *TITLE:FNAME*”, which made me feel part of the family, I can tell you! Of course this is low hanging fruit because we’re all aware how mail outs actually work. No-one has the mind-numbingly and unnecessarily manual task of sitting down and actually writing these things anymore. They, very sensibly, use a computer to take a repetitive task and automate it. This would be fine, and I have no problem with it, except where we attempt to mimic a genuine concern.

One of the big changes I’ve noticed recently on Qantas, the airline that I do most of my flying with, is that they have noticed that my tickets say “Dr Falkner” and not “Mr Falkner”. For years, they would greet me at the entrance to the plane, look at my ticket and then promptly demonstrate the emptiness of the personal greeting by getting the title wrong. (The title, incidentally, is not a big deal. 99% of my students and colleagues call me ‘Nick’, the remainder resorting to “Dr Nick”. The issue here is that they are attempting to conduct an activity in greeting that is immediately revealed as meaningless.) Over the past two years, however, suddenly everyone is reading the whole ticket and, while it is still an activity akin to saying “Hello, Human”, this is a much more reasonable facsimile of a personalised greeting. I note that they did distinguish themselves recently by greeting me as Dr Falkner and my wife, the original Dr Falkner, as (Miss or Mrs, I don’t quite recall) Falkner.

But my mailbox is full of these near misses. Letters from students addressed to Dr Rick Falkner. Many people who write to Professor Falkner, which I get because they’re trying not to offend me but it just goes to show that they haven’t really bothered to look me up. These are all cold calls – surface and shallow, from people who not only don’t know me but, I suspect, don’t really want to get to know me – they’re just after the “Doctor” part of Nick Falkner. Much as Qantas looked at my Frequent Flyer status and changed their tone based on whether I was Silver or Gold (when you’re Gold, cabin crew come down to have a personal chat with you occasionally, especially if you’re part of a Gold couple flying together. I’m scared to ask what Platinum get), where my name and title were a convenient afterthought, most people who write to Professor Nick Falkner are after that facet of me which is useful to them. This is implicitly manipulative and thoroughly inauthentic.

This is, of course, why I try very hard not to do it with students. I do try to be genuinely concerned with the person, rather than their abilities. There are students I’ve known for years and, were I to walk up to them at a social gathering and be unable to recall anything about them other than their marks, they would have a right to feel exploited and ignored – a small cog in my glorious rise to an average career in Academia. This is, of course, not all that easy, especially when you have my memory but the effort is exceedingly important and a good attempt is often as valuable as a good memory – but a good memory generally comes from caring about something and paying attention. We ask it of our students when we present them with educational experiences. We say “This is important, so please pay attention and you’ll develop useful knowledge” so we’re very open about how we expect people to deal with important things. It is, therefore, much more insulting if we make it obvious that we remember nobody from our classes, or nothing of their lives, or we don’t realise the impact that we have from our privileged position at the centre of the web of knowledge. (Yeah, I think I just called us all spiders. Sorry about that. We’re cool spiders, if that helps.)

There are enough pieces of inauthentic e-mail, flyers, TV ads and day-to-day interactions that already bother us, without adding to the inauthenticity in our relationships with our students and our colleagues. Is it easy? No. Is it worthwhile? Yes. Is it what our students should expect of us to at least attempt? I think, yes, but I’d be interested to know what other people think about this – am I setting the bar too high for us or is this just part of our world?

Environmental Impact: Iz Tweetz changing ur txt?

Posted: July 25, 2012 Filed under: Education | Tags: blogging, education, educational problem, educational research, higher education, learning, measurement, resources, student perspective, teaching, teaching approaches, tools

Please, please forgive me for the diabolical title but I have been wondering about the effects of saturation in different communication environments and Twitter seemed like an interesting place to start. For those who don’t know about Twitter, it’s an online micro-blogging social media service. Connect to it via your computer or phone and you can put a message in that is up to 140 characters, where each message is called a tweet. What makes Twitter interesting is the use of hashtags and usernames to allow the grouping of these messages by area or theme (#firstworldproblems, if you’re complaining about the service in Business Class, for example) or to respond to someone (@katyperry – Russell Brand, SRSLY?). Twitter has very significant penetration in the celebrity market and there are often “professional” tweeters for certain organisations.

There is a lot more to say about Twitter but what I want to focus on is the maximum number of characters available – 140. This limit was set for compatibility with SMS messages and, unsurprisingly, a lot of abbreviations used in Twitter have come in from the SMS community. I have been restricting myself to ~1,000 words in recent posts (+/-10%, if I’m being honest) and, with the average word length of approximately 5 for English then, by adding spaces and punctuation to take this to 6, you’d expect my posts to be somewhere in the region of 6,000 characters. Anyone who’s been reading this for a while will know that I love long words and technical terms so there’s a possibility that it’s up beyond this. So one of my posts, split into tweets of the maximum length, would take up about 43 tweets. How long would that take the average Twitterer?
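The back-of-the-envelope figure above is just a ceiling division, and a tiny Python sketch makes the working explicit. The function name `tweets_needed` and its parameter names are made up for this illustration; the 1,000 words, 6 characters per word and 140-character limit are the numbers from the paragraph above.

```python
import math


def tweets_needed(words: int, chars_per_word: int = 6, tweet_limit: int = 140) -> int:
    """Number of full-length tweets needed to carry a post of `words` words."""
    total_chars = words * chars_per_word
    # A partly filled final tweet still counts as a tweet, so round up.
    return math.ceil(total_chars / tweet_limit)


print(tweets_needed(1000))  # 6,000 characters at 140 per tweet -> 43
```

Changing `tweet_limit` shows how quickly the count grows as the messages get shorter.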

Here’s an interesting site that lists some statistics, from 2009 – things will have changed but it’s a pretty thorough snapshot. Firstly, the more followers you have the more you tweet (cause and effect not stated!) but even then, 85% of users update less than once per day, with only 1% updating more than 10 times per day. With the vast majority of users having less than 100 followers (people who are subscribed to read all of your tweets), this makes two tweets per day the dominant activity. But that was back in 2009 and Twitter has grown considerably since then. This article updates things a little, but not in the same depth, and gives us two interesting facts. Firstly, that Twitter has grown amazingly since 2009. Secondly, that event reporting now takes place on Twitter – it has become a news and event dissemination point. This is happening to the extent that a Twitter reported earthquake can expand outwards in the same or slightly less time than the actual earthquake itself. This has become a bit of a joke, where people will tweet about what is happening to them rather than react to the event.

From Twitter’s own blog, March, 2011, we can also see this amazing growth – more people are using Twitter and more messages are being sent. I found another site listing some interesting statistics for Twitter: 225,000,000 users, most tweets are 40 characters long, 40% of users don’t tweet but just read and the average user still has around 100 followers (115 actually). If the previous behaviour patterns hold, we are still seeing an average of two tweets for the majority user who actually posts. But a very large number of people are actually reading Twitter far more than they ever post.

To summarise, millions of people around the world are exposed to hundreds of messages that are 40 characters long and this may be one of their leading sources of information and exposure to text throughout the day. To put this in context, it would take 150 tweets to convey one of my average posts at 40 characters per tweet and this is a completely different way of reading information because, assuming that the ‘average’ sentence is about 15-20 words, very few of these tweets are going to be ‘full’ sentences. Context is, of course, essential and a stream of short messages, even below sentence length, can be completely comprehensible. Perhaps even sentence fragments? Or three words. Two words? One? (With apologies to Hofstadter!) So there’s little mileage in arguing that tweeting is going to change our semantic framework, although a large amount of what moves through any form of blogging, micro or other, is going to always have its worth judged by external agents who don’t take part in that particular activity and find it wanting. (I blog, you type, he/she babbles.)

But is this shortening of phrase, and our immersion in a shorter sentence structure, actually having an impact on the way that we write or read? Basically, it’s very hard to tell because this is such a recent phenomenon. Early social media sites, including the BBs and the multi-user shared environments, did not value brevity as much as they valued contribution and, to a large extent, demonstration of knowledge. There was no mobile phone interaction or SMS link so the text limit of Twitter wasn’t required. LiveJournal was, if anything, the antithesis of brevity as the journalling activity was rarely that brief and, sometimes, incredibly long. Facebook enforces some limits but provides notes so that longer messages can be formed but, of course, the longer the message, the longer the time it takes to write.

Twitter is an encourager of immediacy, of thought into broadcast, but this particular messaging mode, the ability to globally yell “I like ice cream and I’m eating ice cream” as one is eating ice cream is so new that any impact on overall language usage is going to be hard to pin down. As it happens, it does appear that our sentences are getting shorter and that we are simplifying the language but, as this poster notes, the length of the sentence has shrunk over time but the average word length has only slightly shortened, and all of this was happening well before Twitter and SMS came along. If anything, perhaps this indicates that the popularity of SMS and Twitter reflects the direction of language, rather than that language is adapting to SMS and Twitter. (Based on the trend, the Presidential address of 2300 is going to be something along the lines of “I am good. The country is good. Thank you.”)

I haven’t had the time that I wanted to go through this in detail, and I certainly welcome more up-to-date links and corrections, but I much prefer the idea that our technologies are chosen and succeed based on our existing drives and tastes, rather than the assumption that our technologies are ‘dumbing us down’ or ‘reducing our language use’ and, in effect, driving us. I guess you may say I’m a dreamer.

(But I’m not the only one!)

A Design Challenge, a Grand Design Challenge, if you will.

Posted: July 18, 2012 Filed under: Education | Tags: education, educational problem, educational research, feedback, Generation Why, grand challenge, higher education, in the student's head, measurement, principles of design, student perspective, teaching, teaching approaches, thinking, tools, universal principles of design, vygotsky, work/life balance

Question: What is one semester long, designed as a course for students who perform very well academically, has no prerequisites and can be taken by students with no programming exposure and by students with a great deal of programming experience?

Answer: I don’t know but I’m teaching it on Monday.

While I talk about students who perform well academically, this is for the first instance of this course. My goal is that any student can take this course, in some form, in the future.

The new course in our School, Grand Challenges in Computer Science, is part of our new degree structure, the Bachelor of Computer Science (Advanced). This adds a lot more project work and advanced concepts, without disrupting the usual (and already excellent) development structure of the degree. One of the challenges of dealing with higher-performing students is keeping them in a sufficiently large and vibrant peer group while also addressing the minor problem that they’re moving at a different pace to many people that they are friends with. Our solution has been to add additional courses that sit outside of the main progression but still provide interesting material for these students, as well as encouraging them to take a more active role in the student and general community. They can spend time with their friends, carry on with their degrees and graduate at the same time, but also exercise themselves to greater depth and into areas that we often don’t have time to deal with.

In case you’re wondering, I know that some of my students read this blog and I’m completely comfortable talking about the new course in this manner because (a) they know that I’m joking about the “I don’t know” from the Answer above and (b) I have no secrets regarding this course. There are some serious challenges facing us as a species. We are now in a position where certain technologies and approaches may be able to help us with this. One of these is the notion of producing an educational community that can work together to solve grand challenges and these students are very much a potential part of this new community.

The biggest challenge for me is that I have such a wide range of students. I have students who potentially have no programming background and students who have been coding for four years. I have students who are very familiar with the School’s practices and University, and people whose first day is Monday. Of course, my solution to this is to attack it with a good design. But, of course, before a design, we have to know the problem that we’re trying to solve.

The core elements of this course are the six grand challenges as outlined by the NSF, research methods that will support data analysis, the visualisation of large data sources as a grand challenge and community participation to foster grand challenge communities. I don’t believe that a traditional design of lecturing is going to support this very well, especially as the two characteristics that I most want to develop in the students are creativity and critical thinking. I really want all of my students to be able to think their way around, over or through an obstacle and I think that this course is going to be an excellent place to be able to concentrate on this.

I’ve started by looking at my learning outcomes for this course – what do I expect my students to know by the end of this course? Well, I expect them to be able to tell me what the grand challenges are, describe them, and then provide examples of each one. I expect them to be able to answer questions about key areas and, in the areas that we explore in depth, demonstrate this knowledge through the application of relevant skills, including the production of assignment materials to the best of their ability, given their previous experience. Of course, this means that every student may end up performing slightly differently, which immediately means that personalised assessment work (or banded assessment work) is going to be required but it also means that the materials I use will need to be able to support a surface reading, a more detailed reading and a deep reading, where students can work through the material at their own pace.

I don’t want the ‘senior’ students to dominate, so there’s going to have to be some very serious scaffolding, and work from me, to support role fluidity and mutual respect, where the people leading discussion rotate to people supporting a point, or critiquing a point, or taking notes on the point, to make sure that everyone gets a say and that we don’t inhibit the creativity that I’m expecting to see in this course. I will be setting standards for projects that take into account the level of experience of each person, discussed and agreed with the student in advance, based on their prior performance and previous knowledge.

What delights me most about this course is that I will be able to encourage people to learn from each other. Because the major assessment items are all unique to a student, then sharing knowledge will not actually lead to plagiarism or copying. Students will be actively discouraged from doing work for each other but, in this case, I have no problem in students helping each other out – as long as the lion’s share of the work is done by the main student. (The wording of this is going to look a lot more formal but that’s a Uni requirement. To quote “The Castle”, “It’s about the vibe.”) Students will regularly present their work for critique and public discussion, with their response to that critique forming a part of their assessment.

I’m trying to start these students thinking about the problems that are out there, while at the same time giving them a set of bootstrapping tools that can set them on the path to investigation and (maybe) solution well ahead of the end of their degrees. This then feeds into their project work in second and third year. (And, I hope, for at least some of them, Honours and maybe PhD beyond.)

Writing this course has been a delight. I have never had so much excuse to buy books and read fascinating things about challenging issues and data visualisation. However, I think that it will be the students’ response to this that will give me something that I can then share with other people – their reactions and suggestions for improvement will put a seal of authenticity on this that I can then pack up, reorganise, and put out into the world as modules for general first year and high school outreach.

I’m very much looking forward to Monday!

Wrath of Kohn: Well, More Thoughts on “Punished by Rewards”

Posted: July 16, 2012 Filed under: Education | Tags: education, educational problem, feedback, games, higher education, in the student's head, measurement, principles of design, reflection, student perspective, teaching, teaching approaches, thinking, universal principles of design, work/life balance, workload

Yesterday, I was discussing my reading of Alfie Kohn’s “Punished by Rewards” and I was talking about a student focus to this but today I want to talk about the impact on staff. Let me start by asking you to undertake a quick task. Let’s say you are looking for a new job, what are the top ten things that you want to get from it? Write them down – don’t just think about them, please – and have them with you. I’ll put a picture of Kohn’s book here to stop you looking ahead. 🙂

It’s ok, I’ll wait. Written your list?

How far up the list was “Money”? Now, if you wrote money in the top three, I want you to imagine that this new job will pay you a fair wage for what you’re going to do and you won’t have any money troubles. (Bit of a reach, sometimes, I know but please give it a try.) With that in mind, look at your list again.

Does the phrase “excellent incentive scheme” or “great bonus package” figure anywhere on that list? If it does, is it in the top half or the bottom half? If Money wasn’t top three, where was it for you?

According to Kohn, very few people actually make money the top of their list – it tends to be things like ‘type of work’, ‘interesting job’, ‘variety’, ‘challenge’ and stuff like that. So, if that’s the case, why do so many work incentive schemes revolve around giving us money or bonuses as a reward if, for the majority of the population, it’s not the thing that we want? Well, of course, it’s easy. Giving feedback or mentoring is much harder than a $50 gift card, a $2,000 bonus or 500 shares. What’s worse is, because it’s money, it has to be allocated in an artificial scarcity environment or it’s no longer a bonus, it’s an expectation. If you didn’t do this, then the company might go bankrupt.

What if, instead, when you did something really good, you received something that made it easier for you to do all of your work as a recognition of the fact that you’re working a lot? Of course, this would require your manager to have a really good idea of what you were doing and how to improve it, as well as your company being willing to buy you that backlit keyboard with the faux-mink armrest that will let you write reports without even a twinge of arm strain. Some of this, obviously, is part of minimum workplace standards but the idea is that you get something that reflects that your manager understands what you’ve done and is trying to help you to develop further. Carefully selected books, paid trips to useful training, opportunities to further display your skill – all of these are probably going to achieve more of the items on your 10-point list than money will. To quote Kohn, quoting Gruenberg (1980), “The Happy Worker: An Analysis of Educational and Occupational Differences in Determinants of Job Satisfaction”, American Journal of Sociology, 86, pp 267-8:

“Extrinsic rewards become an important determinant of overall job satisfaction only among workers for whom intrinsic rewards are relatively unavailable.”

There are, Kohn argues, many issues with incentive schemes as reward and one of these is the competitive environment that it fosters. I discussed this yesterday so I’ll move to one of the others, which is focusing on meeting the requirements for reward at the expense of quality and in a way that is as safe as possible. Let me give you an example that I recently encountered outside of work: Playing RockBand or SingStar (music games that score your performance). Watch me and my friends who actually sing playing a singing game: yes, we notice the score, but we don’t care about the score. We interpret, we mess around, we occasionally affect the voices of the Victorian-era female impersonator characters from Little Britain. Then watch other groups of people who are playing the game to make the highest score. They don’t interpret. They don’t harmonise spontaneously. In many cases, they barely even sing and focus on making the minimum tunefully accurate noise possible at exactly the right time, having learned the sequence, to achieve the highest score. The quality of the actual singing is non-existent, because this isn’t singing, it’s score-maximisation. Similarly, risk taking has been completely removed. (As an aside, I have excellent pitch and, to my ears, most people who try to maximise their score sound out of tune because they are within the tolerances that the game accepts, but by choosing not to actually sing, there is no fundamental thread of musicality that runs through their performance. I once saw a professional singer deliver a fantastic version of a song, only to be rated as mediocre by the system.)

On Saturday, my wife and I went to the Melbourne-based Australian Centre for the Moving Image (ACMI) to attend the Game Masters gaming exhibition. It was fantastic, big arcade section and tons of great stuff dedicated to gaming. (Including the design document for Deus Ex!) There were lots of games to play, including SingStar (Scored karaoke), RockBand (multi-instrument band playing with feedback and score) and some dancing games. Going past RockBand, Katrina pointed out how little fun the participants appeared to be having and, on looking at it, it was completely true. The three boys in there were messing around with pseudo-musical instruments but, rather than making a loud and joyful noise, they were furrowed of brow and focused on doing precisely the right things at the right times to get positive feedback and a higher score. Now, there are many innovations emerging in this space and it is now possible to explore more and actually take some risks for innovation, but from industry and from life experience, it’s pretty obvious that your perception of what you should be doing and where the reward is going to come from make a huge difference.

If your reward is coming from someone/something else, and they set a bar of some sort, you’re going to focus on reaching that bar. You’re going to minimise the threats to not reaching that bar by playing it safe, colouring inside the lines, trying to please the judge and then, if you don’t get that reward, you’re far more likely to stop carrying out that activity, even if you loved it before. And, boy, if you don’t get that reward, will you feel punished.

I’m not saying Kohn is 100% correct, because frankly I don’t know and I’m not a behaviourist, but a lot of this rings true from my own experience and his use of the studies included in his book, as well as the studies themselves, are very persuasive. I look forward to some discussion on these points!

The Big Picture and the Drug of Easy Understanding: Part II (Eclectic Boogaloo)

Posted: July 10, 2012 Filed under: Education, Opinion | Tags: authenticity, education, educational problem, ethics, feedback, fiero, games, higher education, measurement, student perspective, teaching, teaching approaches, thinking, universal principles of design

In yesterday’s post, I talked about the desire to place work into some sort of grand scheme, referring to movies and films, and illustrating why it’s hard to guarantee consistency from a sketch of your strategy unless you implement everything before you make it available to people. While building upon previous work is very useful, as I’m doing now, if you want to keep later works short by referring back to a shared context established in a previous work, it does make you susceptible to inconsistency if a later work makes you realise that assumptions in a previous work were actually wrong. As I noted in yesterday’s post, I’m actually writing these posts side by side and scheduling them for later, to ensure that I don’t make any more mistakes than I have to, which I can’t easily correct because the work is already displayed.

Strategic approaches to the construction of long term and complex works are essential, but a strategic plan needs to be sufficiently detailed in order to guide the works produced from it. You might get away with an abstract strategy if you produce all of the related works at one time and view them together. But, assuming that works are so long term that they can’t be produced in one sitting, you don’t want to have to seriously revise previous productions or, worse, change the strategy. This is particularly damaging when you are working with students because any significant change to the knowledge construction that you’ve been working with is going to cost you a lot of credibility and risk a high level of disengagement. Students will tolerate an amount of honest mistake, assuming that you are honest and that it is a mistake, but they tend to be very judgmental regarding poor time planning and what they perceive as laziness.

And that, in my opinion, is completely fair because we tend not to allow them poor time planning either. Going into an examination with a misunderstanding of the details of the overlying strategy will result in a non-negotiable fail, not extended understanding from the marking groups who are looking at examination performance. For me, this is an issue of professional ethics in that a consistent and fair delivery of teaching materials will facilitate learning, firstly by keeping the knowledge pathways ‘clean’ but also by establishing a relationship that you are working as hard to be fair to the student as you can, hence their effort is not wasted and you establish a bond of trust.

Now while I would love to say that this means that I have written every lecture completely before starting a new course, this would not be the truth. But this does mean that my strategic planning for new works and knowledge is broken down to a fairly fine grain plan before I start the course running. I wrote a new course last semester and the overall course had been broken up by area, sub-area, learning outcome and was built with all practicals, tutorials and activities clearly indicated. I had also spent a long time identifying the design of the overall course and the focus that we would be taking throughout, down to the structure of every lecture. When it came to writing the lectures themselves, I knew which lectures would contain ‘achievement’ items (the drug aspect where students get a buzz from the “A-ha!” moment), I knew where the pivotal points were and I’d also spent some time working out which skills I could expect in this group, and which skills later courses would expect from them.

We do have a big picture for teaching our students, in that they are part of a particular implementation of a degree that will qualify them in such-and-such a discipline. We can see the discipline syllabi, current learning and teaching practices, our local requirements and the resources that we have to carry all of this out. But this is no longer a strategy and, the more I worked with things, the more I realised that I had produced a tactical (or operational) plan for each week of the lectures – and I had to be diligent about this because one third of my lectures were being given by someone who was a new lecturer. So, on top of all the planning, every lecture had to be self-contained and instructionally annotated so that a new lecturer, with some briefing from me, could carry it out. And it all had to fit together so that structurally, semantically and stylistically, it all looked like one smooth flow.

Had I left the strategic planning to one side, in either not pursuing it or in leaving it too late, or had I not looked at all of the strategic elements that I had to consider, then my operational plan for each week would have been ad hoc or non-existent. Worse, it may have been an unattainable plan; a waste of my time and the students’ efforts. We have far less excuse than George Lucas does for pretending that Star Wars was part of some enormous nine movie vision – although, to be fair, it doesn’t mean that this wasn’t somewhere in his head, but it obviously wasn’t sufficiently well plotted to guarantee a required level of consistency to make us really believe that statement.

The Big Picture is a framing that helps certain creative works drag you in and make more money, whereas in other works it is a valid structure that supports and develops consistency within a shared context. Our work as educators fits squarely into the latter category. Without a solid plan, we risk making short-sighted decisions that please us or the student with ‘easy’ reward activities or the answers that come to hand at the time.

I’m not saying that certain elements have to be left out of our teaching, or that we have to be rigid in an inflexible structure, but consistency and reliability are two very important aspects of gaining student trust and, if holding it together over six serial instalments is too hard for Stephen King, then trying to achieve this, without some serious and detailed planning, over 36 lectures spanning four months is probably too much for most of us. The Big Picture, for us, is something that I believe we can find and use very effectively to make our teaching even better, effectively reducing our workload throughout the semester because we don’t have to carry out massive revisions or fixes, with a little more investment of time up front.

(Afterthought: I had no idea that Dr Steele has released an album called “Eclectic Boogaloo”. I was riffing on the old “Breakin’ 2: Electric Boogaloo” thing. In my defence, it was the 80s and we all looked like this:

)

The Big Picture and the Drug of Easy Understanding: Part I

Posted: July 9, 2012 Filed under: Education, Opinion | Tags: authenticity, education, educational problem, feedback, fiero, games, Generation Why, higher education, measurement, principles of design, reflection, student perspective, teaching, teaching approaches, thinking, tools, universal principles of design

There is a tendency to frame artistic works such as films and books inside a larger frame. It’s hard to find a fantasy novel that isn’t “Book 1 of the Mallomarion Epistemology Cycle” or a certain type of mainstream film that doesn’t relate to a previous film (as II, III or higher) or as a re-interpretation of a film in the face of another canon (the re-re-reboot cycle). There are still independent artistic endeavours within this, certainly, but there is also a strong temptation to assess something’s critical success and then go on to make another version of it, in an attempt to make more money. Some things were always multi-part entities in the planning and early stages (such as the Lord of the Rings books and hence movies), some had multiplicity thrust upon them after unlikely success (yes, Star Wars, I’m looking at you, although you are strangely similar to Hidden Fortress so you aren’t even the start point of the cycle).

From a commercial viewpoint, selling something that only sells itself is nowhere near as interesting as selling something that draws you into a consumption cycle. This does, however, have a nasty habit of affecting the underlying works. You only have to look at the relative length of the Harry Potter books, and the quality of editing contained within, to realise that Rowling reached a point where people stopped cutting her books down – even if that led to chapters of aimless meandering in a tent in later books. Books one to three are, to me, far, far better than the later ones, where commercial influence, the desire to have a blockbuster and the pressure of producing works that would continue to bring in more consumers and potentially transfer better to the screen made some (at least for me) detrimental changes to the work.

This is the lure of the Big Picture – that we can place everything inside a grand plan, a scheme laid out from the beginning, and it will validate everything that has gone before, while including everything that is yet to come. Thus, all answers will be given, our confusion will turn to understanding and we will get that nice warm feeling from wrapping everything up. In many respects, however, the number of things that are actually developed within a frame like this, and remain consistent, is very small. Stephen King experimented with serial writing (short instalments released regularly) for a while, including the original version of “The Green Mile”. He is a very talented and experienced writer and he still found that he had made some errors in already published instalments that he had to either ignore or correct in later instalments. Although he had a clear plan for the work, he introduced errors to public view and he discovered them in later full fleshings of the writing. He makes a note in the book of the Green Mile that one of the most obvious, to him, was having someone scratch their nose with their hand while in a straitjacket. Not having all of the work to look at leaves you open to these kinds of errors, even where you do have a plan, unless you have implemented everything fully before you deploy it.

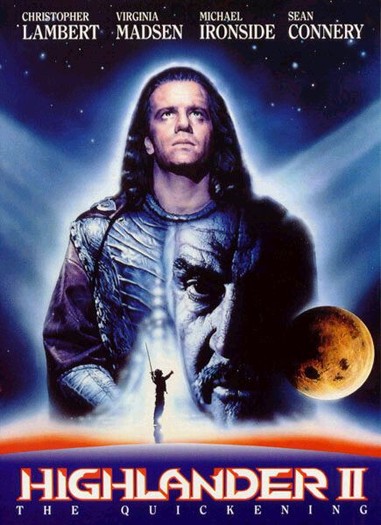

So it’s no surprise that we’re utterly confused by the prequels to Star Wars, because (despite Lucas’ protestations), it is obvious that there was not even a detailed sketch of what would happen. The same can be said of the series “Lost” where any consistency that was able to be salvaged from it was a happy accident, as the writers had no idea what half of the early things actually were – it just seemed cool. And, as far as I’m concerned, there is no movie called Highlander 2.

(I should note that this post is Part 1 of 2, but I am writing both parts side by side, to try and prevent myself from depending in Part 2 upon something that I got wrong in Part 1.)

To take this into an educational space, it is tempting to try and construct learning from a sequence of high-reward moments of understanding. Our students are both delighted and delightful when they “get” something – it’s a joy to behold and one of the great rewards of the teacher. But, much like watching TED talks every day won’t turn you into a genius, it is the total construction of the learning experience that provides something that is consistent throughout and does not have to endure any unexpected reversals or contradictions later on. We don’t have a commercial focus here to hook the students. Instead, we want to keep them going throughout the necessary, but occasionally less exciting, foundation work that will build them up to the point where they are ready to go, in Martin Gardner’s words, “A-ha!”

My problem arises if I teach something that, when I develop a later part of the course, turns out to not provide a complete basis, reinterprets the work in a way that doesn’t support a later point or places an emphasis upon the wrong aspect. Perhaps we are just making the students look at the wrong thing, only to realise later that had we looked at the details, rather than our overall plan, we would have noticed this error. But, now, it is too late and the wrong message is out there.

This is one of the problems of gamification, as I’ve referred to previously, in that we focus on the drug of understanding as a fiero (fierce joy) moment to the exclusion of the actual education experience that the game and reward elements should be reinforcing. This is one of the problems of stating that something is within a structure when it isn’t: any coincidence of aims or correlation of activities is a happy accident, serendipity rather than strategy.

In tomorrow’s post, I’ll discuss some more aspects of this and the implications that I believe it has for all of us as educators.

A Brief Note on the Blog

Posted: July 8, 2012 Filed under: Education | Tags: blogging, education, measurement, reflection, resources, tools, work/life balance, workload, writing

My posts recently have been getting longer and longer and I think I’m hitting the point where ‘prolix’ is an eligible adjective: I’m at risk of using so many words that people may not finish or start reading, or risk being bored by the posts. Despite the fact that I write quickly, it does take some time to write 2,000 words. I want to write the number of words to carry the point across and make the best of your time and my time.

I’m going to experiment with posts that are as informative/useful but that are slightly shorter, aiming for 1,000 words as an upper bound and splitting posts thematically where possible to keep to this. At the end of July, assuming I remember, I’m going to review this to see how it’s going. (The risk, of course, is that editing to keep inside this frame will consume far more time than just writing. Believe me, I’m aware of that one!)

As always, feedback is very welcome and I reserve the right to completely forget about this and start writing 10,000 word megaposts again because I’ve become carried away. Thanks for reading!

HERDSA 2012: Final Keynote, “Connecting with the Other: Some ideas on why Black America likes to sing Bob Dylan”, Professor Liz McKinley

Posted: July 7, 2012 Filed under: Education | Tags: advocacy, authenticity, blogging, collaboration, community, design, education, educational problem, educational research, ethics, feedback, Generation Why, herdsa, higher education, identity, in the student's head, measurement, mr tambourine man, principles of design, protest song, reflection, resources, student perspective, teaching, teaching approaches, thinking

I’ve discussed this final talk in outline but it has had such an impact on me that I wanted to share it in its own post. This also marks the end of my blogging from HERDSA, but I’m sure that you’ve seen enough on this so that’s probably a good thing. (As a note, the next conference that I’ll be at is ICER, in September, so expect some more FrenetoBlogging (TM) then.)

Professor Elizabeth (Liz) McKinley has a great deal of experience in looking at issues of otherness, from her professional role in working with Māori students and postgraduates, and because she is of Ngāti Kahungunu ki Wairarapa and Ngāi Tahu descent herself. She began her talk with a long welcome and acknowledgement speech in an indigenous language (I’m not sure which one it was and I haven’t been able to find out), which she then repeated in English, along with an apology to the local indigenous peoples for her bad pronunciation of some of their words.

She began by musing on Bob Dylan, poet, protest song writer, and why his songs, especially “Blowin’ in the Wind”, were so popular with African Americans. Dylan’s song, released at a turbulent time in US History, asked a key question: “How many roads must a man walk down, before you call him a man?” At a time when African Americans were barely seen as people in some quarters, despite the Constitutional Amendments that had been made so long before, these lyrics captured the frustrations and aspirations of the Black people of the US and it became, in Professor McKinley’s opinion, anthemic in the civil rights movement because of this. She then discussed how many of Bob Dylan’s other songs had been reinterpreted, repurposed, and moved into the Black community, citing “Mr Tambourine Man” as covered by Con Funk Shun as an example of this. (I have been unable to locate this on YouTube or my usual sources but, I’ve been told, it’s not the version that you’re used to and it has an entirely new groove.)

Reinterpretation pays respect to the poet but we rediscover new aspects about the work and the poet and ourselves when we work with another artist. We learn from each other when we share and we see each other’s way of doing things. These are the attributes that we need to adopt if we want to bring in more underrepresented and disadvantaged students from outside of our usual groups – the opportunities to bring their talents to University to share them with us.

She then discussed social justice education in a loose overview: the wide range of pedagogies that are designed to ameliorate the problems caused by unfair practices and marginalisation. Of course, to be marginalised and to be discriminated against, we must have a dominant (or accepted) form, and an other. It is the Other that was a key aspect of the rest of the talk.

The Other can be seen in two very distinct ways. There is the violent Other, the other that we are scared of, that physically repels us, that we hide from and seek to destroy, sideline or ignore. This is driven by social division and inequity. When Gil Scott-Heron sang of the Revolution that wouldn’t be televised, he was speaking to his people who, according to people who look like me, were a violent and terrifying Otherness that lived in the shadows of every city in America. People are excluded when they don’t fit the mainstream thinking, when we’re scared of them – but we can seek to understand the other’s circumstances, which are usually a predicament, to understand their actions and motivations so that we can ameliorate or remedy them.

But there is also the non-violent Other, a philosophical separation, independent of social factors. We often accept this Other, letting it be different and even seeking knowledge from this unknowable other and, rather than classify it as something to be shunned or feared, we defer our categorisation. My interpretation of this non-violent other is perhaps that of those who seek religious orders, at the expense of married life, even small possessions or a personal life within a community that they control. In many regards this is very much an Otherness but we have tolerated and welcomed the religiously Other into our lives for millennia. It has only been reasonably recently that aspects of this, for certain religious orders, have started to associate a violent Otherness with the mystical and philosophical Otherness that we would usually associate with clerics.

Professor McKinley went on to identify some of the Others in Australia and New Zealand: the disadvantaged, those living in rural or remote areas, the indigenous peoples. Many of the benchmarks for these factors are set against nations like the UK, the US and Canada. She questioned why, given how different our nations are, we benchmarked ourselves against the UK but identified that all of this target setting, regardless of which benchmarks were in use, was set against majority groups that were largely metropolitan/urban and non-indigenous. In New Zealand, the indigenous groups are the Māori and the Pacific Islanders (PI), but there is recognition that there is a large degree of co-location between these peoples and the lower socio-economic status groups – a double whammy as far as Otherness goes compared to affluent white culture.

Professor McKinley has been heavily involved in, and is leading, three projects, although she went to great lengths to thank the many people who were making it all work while she was, as she said, running around telling everyone about it. These three projects were the Starpath Project, the Māori and Indigenous (MAI) Doctoral Programme, and the Teaching and Learning in the Supervision of Māori PhD students (TLRI).

The Starpath Project was designed to undertake research and to develop and evaluate evidence-based initiatives, designed to improve educational participation and achievement of students from groups currently under-represented in degree level education. This focuses on the 1st decile schools in NZ, those who fall into the bottom 10%, which includes a high proportion of Māori and PI students. The goal was to increase the number of these students who went into Uni out of school, which is contrary to the usual Māori practice of entering University as mature age students when they have a complexity in their life that drives them to seek University (Liz’s phrase, which I really like).

New Zealand is trying to become a knowledge economy, as they have a small population in a relatively small country, and they want more people in University earlier. While the Pākehā, those of European descent, make up most of those who go to Uni, the major population growth is in the Māori and PI communities. There are going to be increasingly large economic and social problems if these students don’t start making it to University earlier.

This is a 10-year project, where phase 1 was research to identify choke points and barriers in order to find some intervention initiatives, and phase 2 is a systematic implementation, transferable and sustainable, to track students into Uni. This had a strong scientific basis with emphasis on strong partnerships, leading to relationships with nearly 10% of the secondary schools in New Zealand, focused on the low decile groups that are found predominantly around Auckland. The partnerships were considered to be essential here and the good research was picked up and used to form good government policy – a fantastic achievement.

Another key aspect, especially from the indigenous perspective, was to get the families on board. By doing this, involving parents and family, guardian participation in activities shot up from 20% to 80% but it was crucial to think beyond the individual, including writing materials for families – parents and children. Families are the locus of change in these communities. Part of the work here involved transitions support for students to get from school to uni, supported by scholarships to show both the students and the community that they can learn and achieve to the same degree as any other student.

One great approach was that, instead of targeting the disadvantaged kids for support, everyone got the same level of (higher) support which normalised the student support and reduced the Otherness in this context.

The next project, the MAI programme, was a challenge to Māori researchers to develop a doctoral programme and support that didn’t ignore the past while still conforming to the academic needs of the present. (“Decolonizing methodologies: Research and Indigenous Peoples” by Linda Tuhiwai Smith, 1999, was heavily referenced throughout this.) Māori students have cultural connections and associations that can make certain PhD work very difficult: consider a student who is supposed to work with human flesh samples, where handling dead tissue is completely inappropriate in Māori culture. It is profoundly easy, as well as lazy, to map an expectation of conformity over the top of this (Well, if you’re doing our degree then you follow our culture) but this is the worst example of a colonising methodology and this is exactly what MAI was started to address.

MAI works through communities, meeting regularly. Māori academics, students and cultural advisors meet regularly to alleviate the pressures of cross-cultural issues and provide support through meetings and retreats.

The final project, the Māori PhD project, was initiated by MAI (above) to investigate indigenous students, to understand why they were carrying out their PhDs. Students were having problems, as with the tissue example above, so the project also provided advice to institutions and to students, encouraging Pākehā supervisors to work with Māori students, as well as the possibility of Māori supervision if the student needed to feel culturally safe. This was a bicultural project, with five academics across four institutions.

From Smith, 1997, p203, “educational battleground for Māori is spatial. It is about theoretical spaces, pedagogical spaces, structural spaces.” From this project there were differences in what the students were seeking and the associated pedagogies. Some were seeking difference from their own basis, an ancestral Māori basis. Some were Māori but not really seeking that culture. Some, however, were using their own thesis to regain their lost identity as Māori.

The phrase that showed up occasionally was a “colonised history” – even your own identity is threatened by the impact of the colonists on the records, memories and freedoms of your people. We had regularly seen colonists move to diminish and reduce the Other, as a perceived threat, where they classify it as a violent other. The third group of students, above, are trying to rebuild what it meant to be Māori for them, in the face of New Zealand’s present state as a heavily colonised country, where most advantage lies with the Pākehā and Asian communities. They were addressing a sense of loss, in the sense of their loss of what it meant to be Māori. This quest for Māori identity was sometimes a challenge to the institution, hence the importance of this project to facilitate bicultural understanding and allow everyone to be happy with the progress and nature of the study.

At this point in my own notes I wrote “IDENTITY IDENTITY IDENTITY” because it became clearer and clearer to me that this was the key issue that is plaguing us all, and that kept coming up at HERDSA. Who are we? Who is my trusted group? How do I survive? Who am I? While these issues, associated with Otherness in the indigenous community, are particularly significant for low SES groups and the indigenous, they affect all of us in these times of great change.

An issue of identity that I have touched on, and that Professor McKinley brought up in her talk, was how we establish the identity of the teacher, in order to identify who should be teaching. In Māori culture, there are three important aspects: Matauranga (Knowledge), Whakapapa (ancestral links) and Tikanga (cultural protocols and customs). But this raises pedagogical issues, especially when two or more of these clash. Who is the teacher and how can we recognise them? There are significant cultural issues if we seek certain types of knowledge from the outside, because we run headlong into Tikanga. These knowledge barriers may not be flexible at all, which is confronting to western culture (except for all of the secret barriers that we choose not to acknowledge). The teachers may be parents, elders, grandparents – recognising this requires knowledge, time and understanding. And, of course, respect.

Another important aspect is the importance of the community. If you, as a Māori PhD student, go to a community and ask them to answer some questions, at some stage in the future, they’ll expect you back to help out with something else. So, time management becomes an issue because there is a spirit of reciprocity that requires the returned action – this is at odds with restricted time for PhDs and the desire for timely completion if you have to disappear for 2 weeks to help build or facilitate something.

Professor McKinley showed a great picture. A student, graduating with PhD gown surmounted by the sacred cloak of the Māori people. They have to have a separate graduation ceremony, as well as the small ‘two tickets maximum’ one in the hall, because community and family pride is strong – two tickets maximum won’t accommodate the two busloads of people who showed up to see this particular student graduate.

The summary of the Other was that we have two views:

- The Other as a consequence of social, economic and/or political disaffiliation (Don’t pathologise the learning by diagnosing it as a problem and trying to prescribe a remedy.)

- As an alterity that is independent of social force. (Welcoming the other on their own terms. A more generous form but a scarier form for the dominant culture.)

What can we learn from the other? My difference matters to my institution. We need to ensure that we have placed our ethics into social justice education – this stance allows us to frame ethics across the often imposed barriers of difference.

Professor McKinley then concluded by calling up some of her New Zealand colleagues to the stage, to close the talk with a song. An unusual (for me) end to an inspiring and extremely thought-provoking talk. (Sadly, it wasn’t Bob Dylan, but it was in Māori so it may have secretly been so!)

HERDSA 2012: President’s address at the closing

Posted: July 7, 2012 Filed under: Education | Tags: education, herdsa, higher education, identity, leadership, learning, measurement, reflection, thinking, university of western australia, work/life balance, workload

The President of HERDSA, Winthrop Professor Shelda Debowski, spoke to all of us after the final general session at the end of the conference. (As an aside, a Winthrop Professor, at the University of Western Australia, is equivalent to a full Professor (Level E) across the rest of Australia. You can read about it here on page 16 if you’re interested. For those outside Australia, the rest of the paper explains how our system of titles fits into the global usage schemes.) Anyway, back to W/Prof Debowski’s talk!

Last year, one of the big upheavals facing the community was a change at Government level from the Australian Learning and Teaching Council to the Office of Learning and Teaching, with associated changes in staffing and, from what I’m told, that rippled through the entire conference. This year, W/Prof Debowski started by referring to the change that the academic world faces every day – the casualisation of academics, disinterest in development, the highly competitive world in which we now work, where waiting for the cream to rise would be easier if someone wasn’t shaking the container vigorously the whole time (my analogy). The world is changing, she said, but she asked us “is it changing for the better?”

“What is the custodial role of Higher Education?”

We have an increasing focus on performance and assigned criteria; if you don’t match these criteria then you’re in trouble and, as I’ve mentioned before, research focus usually towers over teaching prowess. There is not much evidence of a nuanced approach. The President asked us what we were doing to support people as they move towards being better academics. We are more and more frenetic regarding joining the dots in our career, but that gives us less time for reflection, learning, creativity, collegiality and connectivity. And we need all of these to be effective.

We’re, in her words, so busy trying to stay alive that we’ve lost sight of being academics with a strong sense of purpose, mission and a vision for the future. We need support – more fertile spaces and creative communities. We need recognition and acknowledgement.

One of the largest emerging foci, which has obviously resonated with me a great deal, is the question of academic identity. Who am I? What am I? Why am I doing this? What is my purpose? What is the function of Higher Education and what is my purpose within that environment? It’s hard to see the long term perspective here so it’s understandable that so many people think along the short term rails. But we need a narrative that encapsulates the mission and the purpose to which we are aspiring.

This requires a strategic approach – and most academics don’t understand the real rules of the game, by choice sometimes, and this prevents them from being strategic. You don’t stay in the right Higher Ed focus unless you are aware of what’s going on, what the news sources are, who you need to be listening to and, sometimes, what the basic questions are. Being ignorant of the Bradley Review of Australian Higher Education won’t be an impenetrable shield against the outcomes of people reacting to this report or government changes in the face of the report. You don’t have to be overly politicised but it’s naïve to think that you don’t have to understand your context. You need to have a sense of your place and the functions of your society. This is a fundamental understanding of cause and effect, being able to weigh up the possible consequences of your actions.

The President then referred to the Intelligent Careers work of Arthur et al (1995) and Jones and DeFilippi (1996) in taking the correct decisions for a better career. You need to know: why, how, who, what, where and when. You need to know when to go for grants as the best use of your time, which is not before you have all of the right publications and support, rather than blindly following a directive that “Everyone without 2 ARC DPs must submit a new grant every year to get the practice.”

(On a personal note, I submitted an ARC Discovery Project Application far too early and the feedback was so unpleasantly hostile, even unprofessionally so, that I nearly quit 18 months after my PhD to go and do something else. This point resonated with me quite deeply.)

W/Prof Debowski emphasised the importance of mentorship and encouraged us all to put more effort into mentoring or seeking mentorship. Mentorship was “a mirror to see yourself as others see you, a microscope to allow you to look at small details, a telescope/horoscope to let you look ahead to see the lay of the land in the future”. If you were a more senior person then, on finding someone languishing, you should be moving to mentor them. (Aside: I am very much in the ‘ready to be mentored’ category rather than the ‘ready to mentor’ so I just nodded at more senior looking people.)

It is difficult to overstate the importance of collaboration and connections. Lots of people aren’t ready or confident and this is an international problem, not just an Australian one. Networking looks threatening and hard, and people may need sponsorship to get in and build more sophisticated skills. Engagement is a way to link research and teaching with community, as well as your colleagues. There are also accompanying institutional responsibilities here, with the scope for a lot of social engineering at the institutional level. This requires the institutions to ensure that their focuses will allow people to thrive: if they’re fixated on research, learning and teaching specialists will look bad. We need a consistent, fair, strategic and forward-looking framework for recognising excellence. W/Prof Debowski, who is from University of Western Australia, noted that “collegiality” had been added to performance reviews at her institution – so your research and educational excellence was weighed against your ability to work with others. However we do it, there’s not much argument that we need to change culture and leadership and that all of us, our leaders included, are feeling the pinch.

The President argued that Academic Practice is at the core of a network that is built out of Scholarship, Research, Leadership and Measures of Learning and Teaching, but we also need leaders of University development to understand how we build things and can support development, as well as a Holistic Environment for Learning and Teaching.

The President finished with a discussion of HERDSA’s roles: Fellowships, branches, the conferences, the journal, the news, a weekly mailing list, occasional guides and publications, new scholar support and OLT funded projects. Of course, this all ties back to community, the theme of the conference, but from my previous posts, the issue of identity is looming large for everyone in learning and teaching at the tertiary level.

Who are we? Why do we do what we do? What is our environment?

It was a good way to make us think about the challenges that we faced as we left the space where everyone was committed to thinking about L&T and making change where possible, going back to the world where support was not as guaranteed, colleagues would not necessarily be as open or as ready for change, and even starting a discussion that didn’t use the shibboleths of the research-focused community could result in low levels of attention and, ultimately, no action.

The President’s talk made us think about the challenges but, by focusing on strategy and mentorship, it also made us realise that we could plan for better, build for the future and that we were very much not alone.