First year course evaluation scheme

Posted: January 22, 2016 | Filed under: Education, Opinion | Tags: advocacy, aesthetics, assessment, authenticity, beauty, design, education, educational problem, ethics, higher education, learning, reflection, resources, student perspective, teaching, teaching approaches, time management | 2 Comments

In my earlier post, I wrote:

Even where we are using mechanical or scripted human [evaluators], the hand of the designer is still firmly on the tiller and it is that control that allows us to take a less active role in direct evaluation, while still achieving our goals.

and I said I’d discuss how we could scale up the evaluation scheme to a large first year class. Finally, thank you for your patience, here it is.

The first thing we need to acknowledge is that most first-year/freshman classes are not overly complex nor heavily abstract. We know that we want to work concrete to abstract, simple to complex, as we build knowledge, taking into account how students learn, their developmental stages and the mechanics of human cognition. We want to focus on difficult concepts that students struggle with, to ensure that they really understand something before we go on.

In many courses and disciplines, the skills and knowledge we wish to impart are fundamental and transformative, but really quite straightforward to evaluate. What this means, based on what I’ve already laid out, is that my role as a designer is going to be crucial in identifying how we teach and evaluate the learning of concepts, but the assessment or evaluation itself probably doesn’t require my depth of expert knowledge.

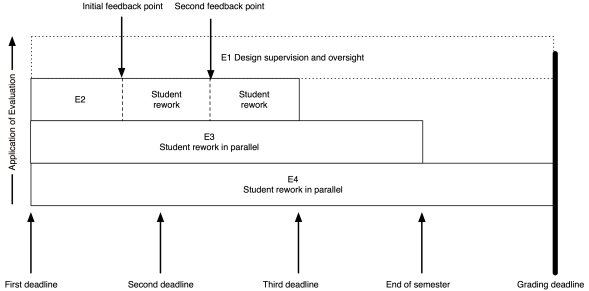

The model I put up previously now looks like this:

My role (as the notional E1) has moved entirely to design and oversight, which includes developing the E3 and E4 tests and training the next tier down, if they aren’t me.

As an example, I’ve put in two feedback points, suitable for some sort of worked output in response to an assignment. Remember that the E2 evaluation is scripted (or based on rubrics) yet provides human nuance and insight, with personalised feedback. That initial feedback point could be peer-based evaluation, group discussion and demonstration, or whatever you like. The key here is that the evaluation clearly indicates to the student how they are travelling; it’s never just “8/10 Good”. If this is a first year course then we can capture much of the required feedback with trained casuals and the underlying automated systems, or by training our students on exemplars to be able to evaluate each other’s work, at least to a degree.

The same pattern as before lies underneath: meaningful timing with real implications. To get access to human evaluation, that work has to go in by a certain date, to give everyone involved enough time to perform the task. Let’s say the first feedback is a peer-assessment. Students can be trained on exemplars, with immediate feedback through many on-line and electronic systems, and then look at each other’s submissions. But, at time X, they know exactly how much work they have to do and are not delayed because another student handed up late. After this pass, they rework and perhaps the next point is a trained casual tutor, looking over the work again to see how well they’ve handled the evaluation.

There could be more rework and review points. There could be less. The key here is that any submission deadline is only required because I need to allocate enough people to the task and keep the number of tasks to allocate, per person, at a sensible threshold.
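As a rough illustration of that allocation reasoning, here is a minimal sketch of estimating how many evaluators a single deadline needs. The function name, the minutes-per-submission figure, the marking hours per day and the per-person cap are all assumptions made for the example, not figures from this scheme.

```python
import math

def markers_needed(submissions, minutes_per_submission, turnaround_days,
                   marking_hours_per_day, max_tasks_per_marker=None):
    """Estimate the number of human evaluators required for one deadline.

    All inputs are planning assumptions supplied by the course designer.
    """
    # Total marking effort, in minutes, for everything handed in on time.
    total_minutes = submissions * minutes_per_submission
    # Minutes one evaluator can give within the turnaround window.
    capacity_per_marker = turnaround_days * marking_hours_per_day * 60
    by_time = math.ceil(total_minutes / capacity_per_marker)
    if max_tasks_per_marker is None:
        return by_time
    # Also respect a "sensible threshold" of tasks per person.
    by_load = math.ceil(submissions / max_tasks_per_marker)
    return max(by_time, by_load)

# Hypothetical class: 360 submissions, 15 minutes each,
# 3 days to mark, 4 marking hours per evaluator per day.
print(markers_needed(360, 15, 3, 4))
print(markers_needed(360, 15, 3, 4, max_tasks_per_marker=40))
```

The point of the sketch is only that the deadline falls out of the staffing arithmetic: change the window or the per-person cap and the number of people you need changes with it.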

Beautiful evaluation is symmetrically beautiful. I don’t overload the students or lie to them about the necessity of deadlines but, at the same time, I don’t overload my human evaluators by forcing them to do things when they don’t have enough time to do it properly.

As for them, so for us.

Throughout this process, the E1 (supervising evaluator) is seeing all of the information on what’s happening and can choose to intervene. At this scale, if E1 were also involved in evaluation, intervention would likely be last-minute and only in dire emergency. Early intervention depends upon early identification of problems and sufficient resources to be able to act. Your best agent of intervention is probably the person who has the whole vision of the course, assisted by other human evaluators. This scheme gives the designer the freedom to have that vision and allows you to plan for how many other people you need to help you.

In terms of peer assessment, we know that we can build student communities and that students can appreciate each other’s value in a way that enhances their perceptions of the course and keeps them around for longer. This can be part of our design. For example, we can ask the E2 evaluators to carry out simple community-focused activities in classes as part of the overall learning preparation and, once students are talking, get them used to the idea of discussing ideas rather than having dualist confrontations. This then leads into support for peer evaluation, with the likelihood of better results.

Some of you will be saying “But this is unrealistic, I’ll never get those resources.” Then, in all likelihood, you are going to have to sacrifice something: number of evaluations, depth of feedback, overall design or speed of intervention.

You are a finite resource. Killing you with work is not beautiful. I’m writing all of this to speak to everyone in the community, to get them thinking about the ugliness of overwork, the evil nature of demanding someone have no other life, the inherent deceit in pretending that this is, in any way, a good system.

We start by changing our minds, then we change the world.

I’m curious about the time scale you’ve used with this model and the granularity of assignments (in an intro CS context). How far apart are your deadlines, for example? What grading turnaround have you found feasible for the E2 component, and with what ratio of E2 staff to students?

Your model reflects some of what I’ve been trying in my large intro CS courses, but I find the bottleneck is the turnaround time for the E2 staff. Obviously, assignment granularity is a factor here, so I’d be interested to understand how you instantiate this model around actual assignments and deadlines. What have you found feasible?

(My context is unusual in that we teach courses in 7-week timeframes, rather than semester-length timeframes. Human timing bottlenecks are critical at that pacing.)

thanks,

Kathi

Hi Kathi,

Thanks for the question. It’s one that I will be writing more on, because getting this right is the most pragmatic concern.

Right now, the tension is between the requirement to get enough assignments and having the staff to do that to the right level. From looking at how my markers operate, I can scale most things (by adding more markers) to get full evaluation and feedback within three days. This includes the time to brief, check mark, report back for clarification and then deliver full marking.

When we used to assess free text puzzle solutions for 360 students, we used a team of markers and this worked at this scale. We weren’t using a “deliver immediately for rework” approach at that point but, in terms of E2 scale, I do have evidence from a challenging marking task (rubric plus some human interpretation) that we can do this reliably within three days and still have enough time for E1 oversight.

Thus, we can look at a calendar week as forming an appropriate frame for one full iteration of “submit/E2 mark/feedback delivery/rework/resubmit” where the students get some days to rework. If the rework options were quick hits or your E2s can mark faster (and you are still happy with the quality) there’s no reason that this couldn’t shrink.

One cost-saving can be pre-processing through E3 and E4 automation to provide the E2s with things that are more focused towards their skills and abilities. My suggestion would be to put these into the submission system up front, to make it clear if the work is even ready to go to E2s so students know immediately that they need to keep working. Otherwise, the ‘delayed synchronised’ response will keep them on tenterhooks unnecessarily.
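A minimal sketch of what such an up-front check might look like, assuming a submission is just a set of named text files. The `Submission` class, the required-file list and the minimum length are hypothetical, and a real E3/E4 stage would run whatever tests the designer has written rather than these placeholder checks.

```python
from dataclasses import dataclass

@dataclass
class Submission:
    student: str
    files: dict  # filename -> text content (an assumed representation)

def precheck(submission, required_files, min_chars=200):
    """Return a list of problems; an empty list means ready for E2."""
    problems = []
    for name in required_files:
        text = submission.files.get(name)
        if text is None:
            problems.append(f"missing file: {name}")
        elif len(text.strip()) < min_chars:
            problems.append(f"{name} is too short to evaluate")
    return problems

def route(submission, required_files):
    """Tell the student immediately, or queue the work for human marking."""
    problems = precheck(submission, required_files)
    if problems:
        return ("return_to_student", problems)
    return ("queue_for_e2", [])

# Hypothetical submission with one required file missing.
work = Submission("s123", {"report.txt": "x" * 300})
print(route(work, ["report.txt", "code.py"]))
```

Because the check runs at submission time, the student learns straight away that the work is not yet ready, and the E2s only see work that is worth their attention.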

It’s what works for you, on the understanding that the commitment to this scheme implicitly comes with a trade-off that you might have fewer assessments of this type but the results should be better and you should more easily achieve what you sought to provide for the students.

I teach a similar structure in intensive mode as part of off-shore teaching and I’m finding that the shorter timeframe is making me question how much I can really do, if I want to do things the way that I’ve set out.

I look forward to hearing more about your course!

Thanks,

Nick.
